Editor’s note: This article follows “The Staging Dichotomy” series as a technical deep dive into data migration.

eBay connects millions of buyers and sellers in more than 190 markets around the world to create economic opportunity for everyone, everywhere. As we continue to innovate on behalf of our customers, our team is focused on improving the velocity of our software delivery, which ultimately will enable us to ship features faster to our customers.

As mentioned in “The Staging Dichotomy,” a stable and predictable staging environment is paramount to achieving higher velocity. It all boils down to having high-quality test data and a robust staging infrastructure, which together form the foundation for testing thousands of features across the site in a scalable and efficient way.

Over the last several months, we have improved staging data quality by copying a subset of production data, anonymizing it, and moving it to the staging environment, where it is available for testing any combination of test cases.

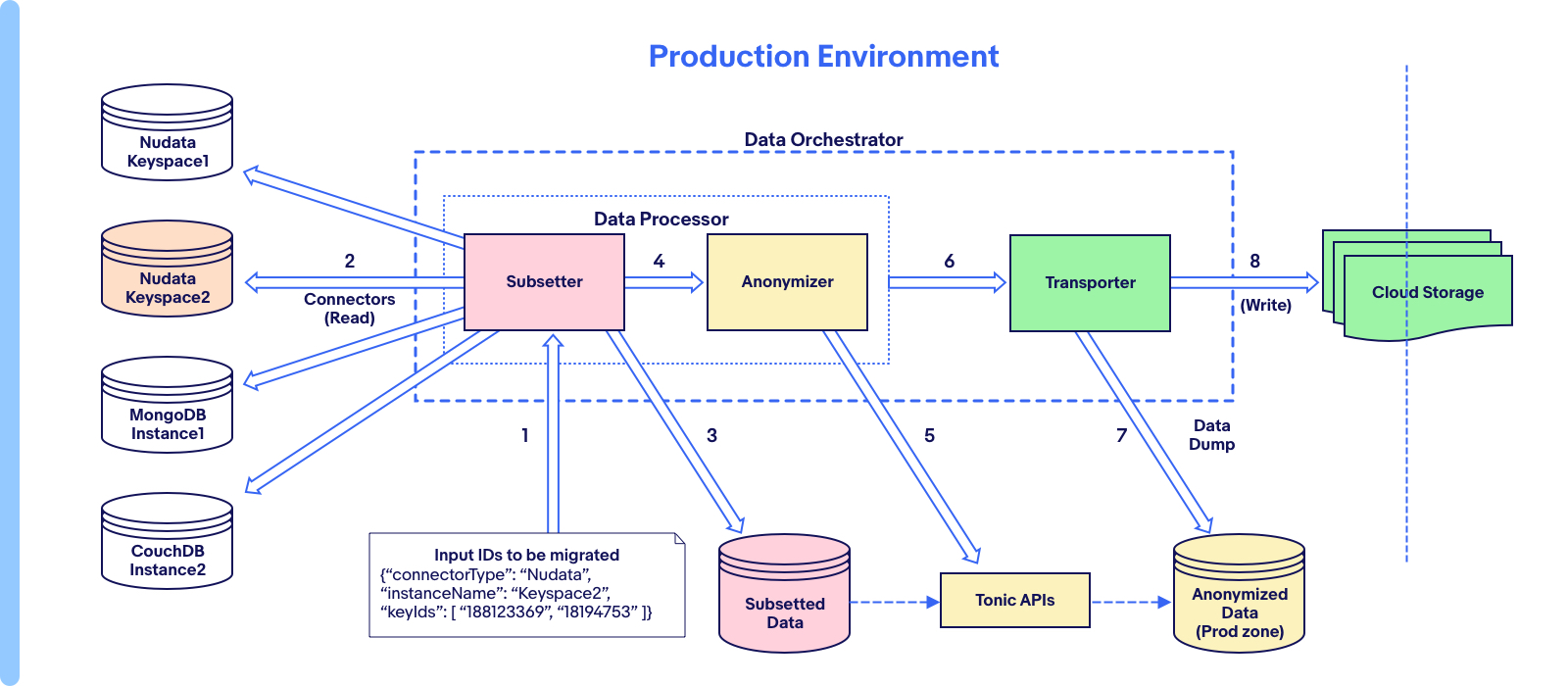

In this article, we describe the system architecture of our scalable NoSQL data migration system, which copies the data for any given set of keys from a NoSQL production instance (source) to the corresponding staging instance (destination). The core data orchestrator runs as a Jenkins pipeline in both the production and staging environments to copy and write the data.

Data Orchestrator

The data orchestrator contains two main components, as shown in the system diagram below:

- Data processor

- Transporter

The data processor is a REST service that takes as input the keys to be subsetted from the production database. It also takes the connector type and database instance name, which determine the production database it connects to. Based on this connector context, a connector factory creates the appropriate connector instance, such as a NuData or MongoDB connector. Each connector overrides the methods that read documents from and insert documents into its type of database. Connectors also use a matching set of data converters to convert data between BSON, JSON strings, object nodes, and NuData key documents. With this design, supporting a new database type in the data pipeline requires only minimal changes: adding a new connector and new data conversion types. The rest of the data processor and the pipeline functions are the same for all types of databases.
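The connector abstraction can be sketched as a small factory. This is a minimal Python sketch, not eBay's implementation: the class names (`Connector`, `NuDataConnector`, `MongoConnector`, `ConnectorFactory`), the methods `read_documents` and `insert_documents`, and the in-memory storage are hypothetical stand-ins for real NuData and MongoDB clients.

```python
from abc import ABC, abstractmethod
from typing import Callable, Dict, List


class Connector(ABC):
    """Common interface that each database-specific connector overrides."""

    def __init__(self, instance_name: str) -> None:
        self.instance_name = instance_name

    @abstractmethod
    def read_documents(self, keys: List[str]) -> List[dict]:
        """Read the documents for the given keys from the database."""

    @abstractmethod
    def insert_documents(self, documents: List[dict]) -> int:
        """Insert documents into the database and return how many were written."""


class InMemoryConnector(Connector):
    """Dictionary-backed storage so the sketch runs without a real database."""

    def __init__(self, instance_name: str) -> None:
        super().__init__(instance_name)
        self.store: Dict[str, dict] = {}

    def read_documents(self, keys: List[str]) -> List[dict]:
        return [self.store[k] for k in keys if k in self.store]

    def insert_documents(self, documents: List[dict]) -> int:
        for doc in documents:
            self.store[doc["_id"]] = doc
        return len(documents)


class NuDataConnector(InMemoryConnector):
    """Hypothetical placeholder: a real one would call NuData APIs and converters."""


class MongoConnector(InMemoryConnector):
    """Hypothetical placeholder: a real one would use a MongoDB driver and BSON."""


class ConnectorFactory:
    """Maps a connector type string to the class that builds the connector."""

    _registry: Dict[str, Callable[[str], Connector]] = {
        "nudata": NuDataConnector,
        "mongodb": MongoConnector,
    }

    @classmethod
    def register(cls, connector_type: str, builder: Callable[[str], Connector]) -> None:
        # A new database type only needs a new connector registered here.
        cls._registry[connector_type.lower()] = builder

    @classmethod
    def create(cls, connector_type: str, instance_name: str) -> Connector:
        try:
            builder = cls._registry[connector_type.lower()]
        except KeyError:
            raise ValueError(f"unsupported connector type: {connector_type}") from None
        return builder(instance_name)
```

Because the rest of the pipeline depends only on the `Connector` interface, adding another database under this design would mean writing one connector class and registering it with `ConnectorFactory.register`.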

Data Orchestrator on the Production Side

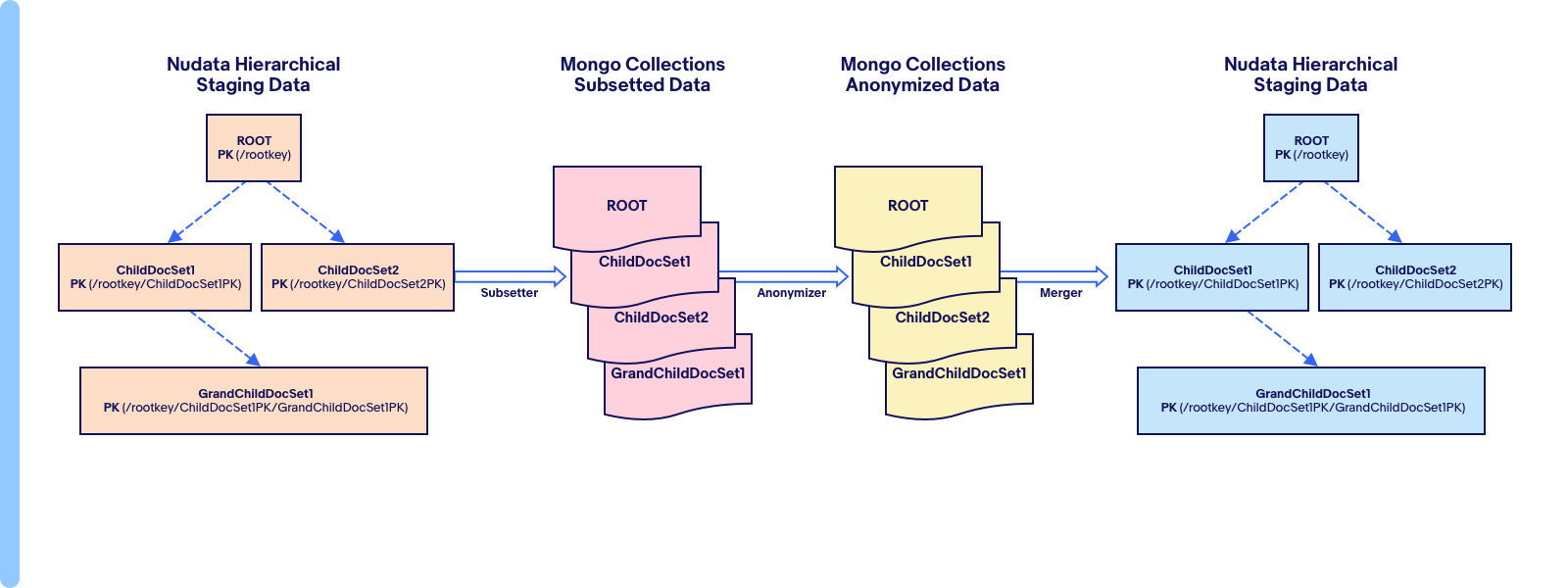

The subsetter uses the connectors to read documents from the production database and writes the subsetted data as collections into an intermediate Mongo database. The diagram below shows an example of a NuData hierarchical data model in production and how, after subsetting, it is converted into multiple individual collections, each holding a group of similar documents.
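To make the subsetting step concrete, here is a sketch of splitting one hierarchical document into per-type collections. The input shape (nodes with `type`, `id`, `fields`, and `children`) and the path-style `_id` with a `parent` reference are assumptions for illustration; the real NuData model and collection naming may differ.

```python
from collections import defaultdict
from typing import Any, Dict, List, Optional


def flatten_tree(root: Dict[str, Any]) -> Dict[str, List[dict]]:
    """Split one hierarchical document into collections keyed by node type."""
    collections: Dict[str, List[dict]] = defaultdict(list)

    def visit(node: Dict[str, Any], parent_key: Optional[str]) -> None:
        # The path from the root identifies the node uniquely across subtrees.
        key = f"{parent_key or ''}/{node['type']}:{node['id']}"
        # Each node becomes one flat document in the collection for its type,
        # keeping a pointer to its parent so the tree can be rebuilt later.
        collections[node["type"]].append(
            {**node.get("fields", {}), "_id": key, "parent": parent_key}
        )
        for child in node.get("children", []):
            visit(child, key)

    visit(root, None)
    return dict(collections)
```

Grouping similar nodes into one collection lets each collection get its own anonymization rules, which matches the per-collection workspaces used in the next step.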

These subsetted Mongo collections are then anonymized using Tonic APIs, which create a workspace for each collection. When a database instance is anonymized for the first time, no security anonymization rules exist yet for its workspaces. So a set of workspaces named “security” is created covering all collections of the new database instance, and the necessary anonymization rules are added and then reviewed by the security team. Once reviewed and approved, each “security” workspace is cloned to become the “main” workspace for its collection, which is used for all data copied from this database instance going forward. Every run of the data orchestrator creates its own anonymization workspaces, named after the run ID and carrying all the approved rules from the “main” workspace.
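The workspace lifecycle (a reviewed “security” workspace, cloned to “main”, then copied for each run) can be modeled as a small state machine. This sketch does not call the Tonic API; `Workspace`, `WorkspaceRegistry`, its methods, and the rule names are hypothetical, and workspaces are simply kept in a dictionary.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class Workspace:
    name: str
    collection: str
    rules: Dict[str, str] = field(default_factory=dict)  # field path -> rule name
    approved: bool = False


class WorkspaceRegistry:
    """In-memory model of the security -> main -> per-run workspace lifecycle."""

    def __init__(self) -> None:
        self.workspaces: Dict[str, Workspace] = {}

    @staticmethod
    def _key(name: str, collection: str) -> str:
        return f"{collection}:{name}"

    def create_security(self, collection: str, proposed: Dict[str, str]) -> Workspace:
        # First time a collection is seen: draft rules await security review.
        ws = Workspace("security", collection, dict(proposed))
        self.workspaces[self._key("security", collection)] = ws
        return ws

    def approve(self, collection: str) -> Workspace:
        # After review, clone the "security" workspace into "main".
        security = self.workspaces[self._key("security", collection)]
        security.approved = True
        main = Workspace("main", collection, dict(security.rules), approved=True)
        self.workspaces[self._key("main", collection)] = main
        return main

    def create_run_workspace(self, run_id: str, collection: str) -> Workspace:
        # Each run gets its own workspace, copying the approved "main" rules.
        main = self.workspaces.get(self._key("main", collection))
        if main is None:
            raise LookupError(f"no approved 'main' workspace for {collection}")
        ws = Workspace(run_id, collection, dict(main.rules), approved=True)
        self.workspaces[self._key(run_id, collection)] = ws
        return ws
```

Copying the rules into each run's workspace means a later change to “main” does not alter the record of what an earlier run applied.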

After anonymization, the transporter component on the production side takes a Mongo dump of the anonymized data and writes it to cloud storage.
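A sketch of the production-side transporter, assuming the MongoDB Database Tools `mongodump` binary is installed. The `--uri`, `--db`, `--archive`, and `--gzip` options are real `mongodump` options; the object-key layout, the `upload` callable (a stand-in for whichever cloud-storage client is used), and the `dry_run` switch are hypothetical. The URI is assumed not to contain a database path, since `--db` selects the database.

```python
import shlex
import subprocess
from pathlib import Path
from typing import Callable, List


def mongodump_command(uri: str, database: str, archive_path: Path) -> List[str]:
    """Build a mongodump call that writes one gzipped archive of a database."""
    return [
        "mongodump",
        f"--uri={uri}",
        f"--db={database}",
        f"--archive={archive_path}",
        "--gzip",
    ]


def transport(run_id: str, uri: str, database: str, workdir: Path,
              upload: Callable[[Path, str], None], dry_run: bool = True) -> str:
    """Dump the anonymized database, then pass the archive to `upload`.

    `upload(local_path, object_key)` is a hypothetical cloud-storage hook.
    """
    archive = workdir / f"{database}.archive.gz"
    cmd = mongodump_command(uri, database, archive)
    if dry_run:
        print("would run:", shlex.join(cmd))
    else:
        subprocess.run(cmd, check=True)  # raises if mongodump fails
    # Grouping objects by run ID lets the staging side process each run once.
    object_key = f"anonymized/{run_id}/{archive.name}"
    upload(archive, object_key)
    return object_key
```

A single archive per database keeps the hand-off between zones to one object per run, which the staging side can restore in one step.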

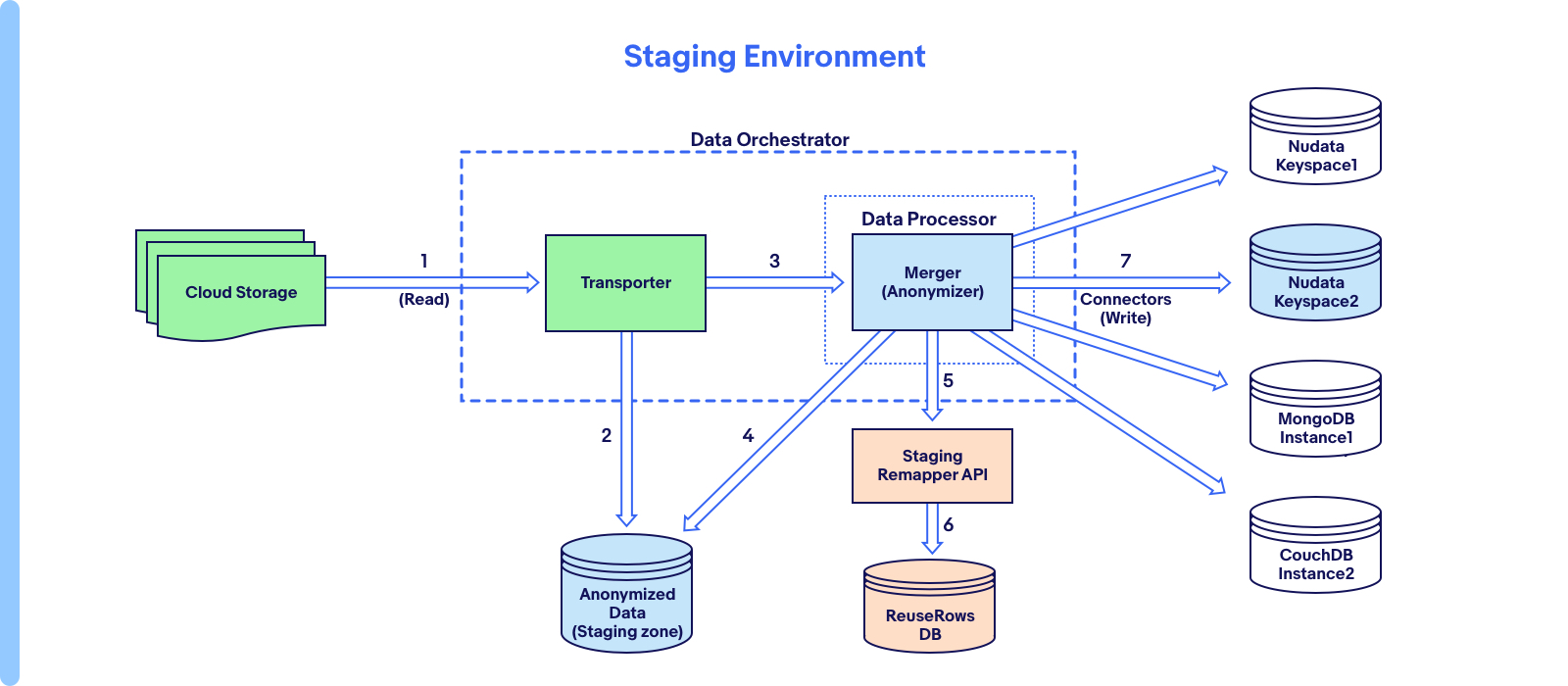

Data Orchestrator on the Staging Side

The data orchestrator on the staging side continuously polls cloud storage for newly written data and downloads it. It then writes the data to an intermediate database in the staging zone, as shown below.
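The staging-side polling loop can be sketched as follows. `list_keys`, `download`, and `restore` are hypothetical callables standing in for the cloud-storage listing, the download step, and a process runner (for example `lambda cmd: subprocess.run(cmd, check=True)`); `mongorestore` with `--uri`, `--archive`, and `--gzip` is the standard way to load an archive produced by `mongodump --archive --gzip`. Keys are recorded only after their restore succeeds; a real pipeline would also persist this set so that a restart does not reprocess old data.

```python
import time
from typing import Callable, Iterable, List, Optional, Set


def mongorestore_command(archive_path: str, uri: str) -> List[str]:
    """Build a mongorestore call that loads one gzipped mongodump archive."""
    return ["mongorestore", f"--uri={uri}", f"--archive={archive_path}", "--gzip"]


def new_keys(listed: Iterable[str], processed: Set[str]) -> List[str]:
    """Object keys not processed before, in sorted order."""
    return sorted(set(listed) - processed)


def poll(list_keys: Callable[[], Iterable[str]],
         download: Callable[[str], str],
         restore: Callable[[List[str]], None],
         staging_uri: str,
         interval_s: float = 60.0,
         max_polls: Optional[int] = None) -> Set[str]:
    """Poll storage, download each new archive, and restore it into staging."""
    processed: Set[str] = set()
    polls = 0
    while max_polls is None or polls < max_polls:
        for key in new_keys(list_keys(), processed):
            local_path = download(key)  # returns a local file path
            restore(mongorestore_command(local_path, staging_uri))
            processed.add(key)  # recorded only after restore returns
        polls += 1
        if max_polls is None or polls < max_polls:
            time.sleep(interval_s)
    return processed
```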

Once the anonymized data is in the staging zone database, the data orchestrator calls the merger REST API, which reads all collections of anonymized data. The merger then processes every document using the staging remapper API. The staging remapper holds the mapping from previously anonymized IDs to the corresponding IDs inserted in staging. For example, any item or seller ID that was previously copied from production to staging should resolve to the same staging ID, so that the data is not duplicated every time the pipeline copies it. This makes it much easier to append only the latest information for a particular item, or to add more items from the same seller. These staging IDs are mostly Oracle DB sequence IDs generated in staging tables by the Oracle data migration pipeline. Once the anonymized IDs in a document are remapped to staging IDs, the merger inserts the data into the staging database. Again, documents with duplicate keys are not inserted. This is by design: previously migrated data should not be updated, since it may have been modified for specific testing needs after it was migrated.
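The merge rules described above (remap every anonymized ID, insert only new keys, never update existing documents) fit in a short function. This is a sketch under assumptions: `remap` is a plain dictionary standing in for the staging remapper API, `staging` is a dictionary standing in for the staging database, and the first entry of `id_fields` is assumed to be the document key. Documents whose IDs the remapper does not know yet are set aside and their IDs collected, which corresponds to the feedback loop into the Oracle pipeline mentioned under Challenges.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, Iterable, List, Sequence, Set


@dataclass
class MergeResult:
    inserted: List[Any] = field(default_factory=list)
    skipped_duplicates: List[Any] = field(default_factory=list)
    missing_ids: Set[Any] = field(default_factory=set)


def merge(documents: Iterable[Dict[str, Any]],
          id_fields: Sequence[str],
          remap: Dict[Any, Any],
          staging: Dict[Any, Dict[str, Any]]) -> MergeResult:
    """Remap anonymized IDs to staging IDs, then insert only documents with new keys."""
    result = MergeResult()
    key_field = id_fields[0]  # assumption: first ID field is the document key
    for doc in documents:
        missing = [doc[f] for f in id_fields if f in doc and doc[f] not in remap]
        if missing:
            # Unknown IDs go back to the Oracle pipeline; the document waits.
            result.missing_ids.update(missing)
            continue
        remapped = dict(doc)
        for f in id_fields:
            if f in doc:
                remapped[f] = remap[doc[f]]
        key = remapped[key_field]
        if key in staging:
            # Never overwrite: the staging copy may have been edited for a test.
            result.skipped_duplicates.append(key)
        else:
            staging[key] = remapped
            result.inserted.append(key)
    return result
```

In this sketch a document with any unresolved ID is skipped entirely rather than inserted with a mix of anonymized and staging IDs, which keeps references consistent at the cost of waiting for a later run.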

Challenges

- Anonymizing nested JSON data was complex when only part of the data had to be anonymized, for example, changing only the number in the NuData key /__ROOT__:(LONG, 330318110603)/StorePolicies:(STR, SHIPPING). We created special anonymization and remapper rules to handle such scenarios.

- Anonymized IDs not found in the staging remapper had to be collected, and we had to rerun the Oracle data pipeline to migrate that missing data first. Maintaining this feedback loop is critical for data integrity.

- Converting hierarchical keys to JSON and BSON objects posed unique challenges. We use converters with data splitters that handle special characters in hierarchical keys.

- There was no efficient way to retrieve an entire document tree at all levels of a hierarchical dataset. New APIs were added to the NuData database to retrieve the entire hierarchy for the given root keys.

- Debugging failures took our team additional time. We now capture a request context containing the key IDs and status information for each stage of the pipeline, and save it as run history for debugging and reference.
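Two of these challenges, splitting hierarchical keys that can contain special characters and anonymizing only part of a key, can be illustrated together. The segment format is inferred from the example key /__ROOT__:(LONG, 330318110603)/StorePolicies:(STR, SHIPPING); the function names and the choice to anonymize every `LONG` value are hypothetical, and the splitter assumes that any parentheses inside values are balanced.

```python
import re
from typing import Callable, List, NamedTuple

# One segment looks like Name:(TYPE, value); the value may contain '/'.
SEGMENT_RE = re.compile(r"^(?P<name>[^:]+):\((?P<type>[A-Z]+), (?P<value>.*)\)$")


class Segment(NamedTuple):
    name: str
    type: str
    value: str


def split_key(key: str) -> List[str]:
    """Split on '/' only outside parentheses, so values may contain '/'."""
    parts, buf, depth = [], [], 0
    for ch in key:
        if ch == "(":
            depth += 1
        elif ch == ")":
            depth = max(depth - 1, 0)
        if ch == "/" and depth == 0:
            if buf:
                parts.append("".join(buf))
            buf = []
        else:
            buf.append(ch)
    if buf:
        parts.append("".join(buf))
    return parts


def parse_key(key: str) -> List[Segment]:
    segments = []
    for part in split_key(key):
        m = SEGMENT_RE.match(part)
        if m is None:
            raise ValueError(f"unrecognized key segment: {part!r}")
        segments.append(Segment(m["name"], m["type"], m["value"]))
    return segments


def format_key(segments: List[Segment]) -> str:
    return "".join(f"/{s.name}:({s.type}, {s.value})" for s in segments)


def anonymize_key(key: str, anonymize_long: Callable[[str], str]) -> str:
    """Replace only LONG values (such as the item number); names and STR values stay."""
    return format_key([
        s._replace(value=anonymize_long(s.value)) if s.type == "LONG" else s
        for s in parse_key(key)
    ])
```

Parsing into segments and re-formatting, rather than running a regex substitution over the whole key, keeps the rest of the key byte-for-byte intact, which matters when the rewritten key must later be remapped.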

Next Steps

This data migration pipeline has helped us copy large amounts of complex data from production so that we can test all possible scenarios. We have developed pipelines supporting both Oracle and NoSQL databases. The NoSQL data pipeline was designed to be generic so that it can expand to any database. Looking ahead, we are working on expanding it to CouchDB and ClickHouse databases.