We’re taking part in Copyright Week, a series of actions and discussions supporting key principles that should guide copyright policy. Every day this week, various groups are taking on different elements of copyright law and policy, and addressing what’s at stake, and what we need to do to make sure that copyright promotes creativity and innovation.

Long before generative AI, copyright holders warned that new technologies for reading and analyzing information would destroy creativity. Internet search engines, they argued, were infringement machines—tools that copied copyrighted works at scale without permission. As they had with earlier information technologies like the photocopier and the VCR, copyright owners sued.

Courts disagreed. They recognized that copying works in order to understand, index, and locate information is a classic fair use—and a necessary condition for a free and open internet.

Today, the same argument is being recycled against AI. At its core, the question is whether copyright owners should be allowed to control how others analyze, reuse, and build on existing works.

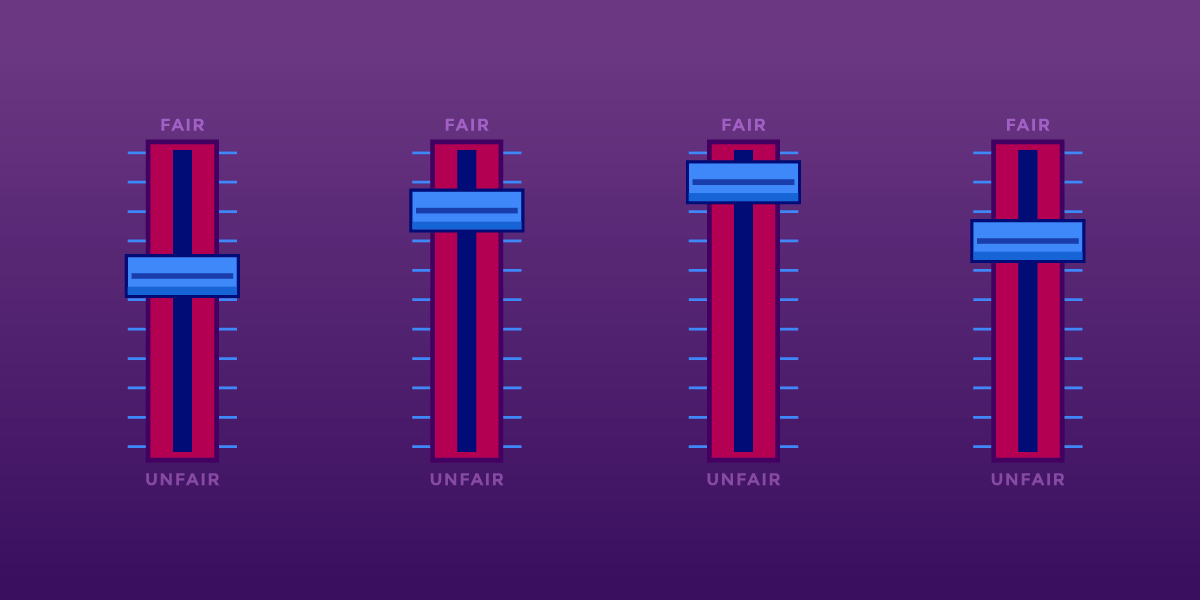

Fair Use Protects Analysis—Even When It’s Automated

U.S. courts have long recognized that copying for purposes of analysis, indexing, and learning is a classic fair use. That principle didn’t originate with artificial intelligence. It doesn’t disappear just because the processes are performed by a machine.

Copying works in order to understand them, extract information from them, or make them searchable is transformative and lawful. That’s why search engines can index the web, libraries can make digital indexes, and researchers can analyze large collections of text and data without negotiating licenses from millions of rightsholders. These uses don’t substitute for the original works; they enable new forms of knowledge and expression.

Training AI models fits squarely within that tradition. An AI system learns by analyzing patterns across many works. The purpose of that copying is not to reproduce or replace the original texts, but to extract statistical relationships that allow the AI system to generate new outputs. That is the hallmark of a transformative use.

Attacking AI training on copyright grounds misunderstands what’s at stake. If copyright law is expanded to require permission for analyzing or learning from existing works, the damage won’t be limited to generative AI tools. It could threaten long-standing practices in machine learning and text-and-data mining that underpin research in science, medicine, and technology.

Researchers already rely on fair use to analyze massive datasets such as scientific literature. Requiring licenses for these uses would often be impractical or impossible, and it would advantage only the largest companies with the money to negotiate blanket deals. Fair use exists to prevent copyright from becoming a barrier to understanding the world. The law has protected learning before. It should continue to do so now, even when that learning is automated.

A Road Forward For AI Training And Fair Use

One court has already shown how these cases should be analyzed. In Bartz v. Anthropic, the court found that using copyrighted works to train an AI model is a highly transformative use. Training is a way of studying how language works; it is not about reproducing or supplanting the original books. Any harm to the market for the original works was speculative.

The court in Bartz rejected the idea that an AI model might infringe because, in some abstract sense, its output competes with existing works. While EFF disagrees with other parts of the decision, the court’s ruling on AI training and fair use offers a good approach. Courts should focus on whether training is transformative and non-substitutive, not on fear-based speculation about how a new tool could affect someone’s market share.

AI Can Create Problems, But Expanding Copyright Is the Wrong Fix

Workers’ concerns about automation and displacement are real and should not be ignored. But copyright is the wrong tool to address them. Managing economic transitions and protecting workers during turbulent times may be core functions of government, but copyright law doesn’t help with that task in the slightest. Expanding copyright control over learning and analysis won’t stop new forms of worker automation—it never has. But it will distort copyright law and undermine free expression.

Broad licensing mandates may also do harm by entrenching today’s largest incumbents. Only the largest tech firms can afford to negotiate massive licensing deals covering millions of works. Smaller developers, research teams, nonprofits, and open-source projects will all get locked out. Copyright expansion won’t restrain Big Tech; it will give it a new advantage.

Fair Use Still Matters

Learning from prior work is foundational to free expression. Rightsholders cannot be allowed to control it. Courts have rejected that move before, and they should do so again.

Search, indexing, and analysis didn’t destroy creativity. Nor did the photocopier, nor the VCR. They expanded speech, access to knowledge, and participation in culture. Artificial intelligence raises hard new questions, but fair use remains the right starting point for thinking about training.