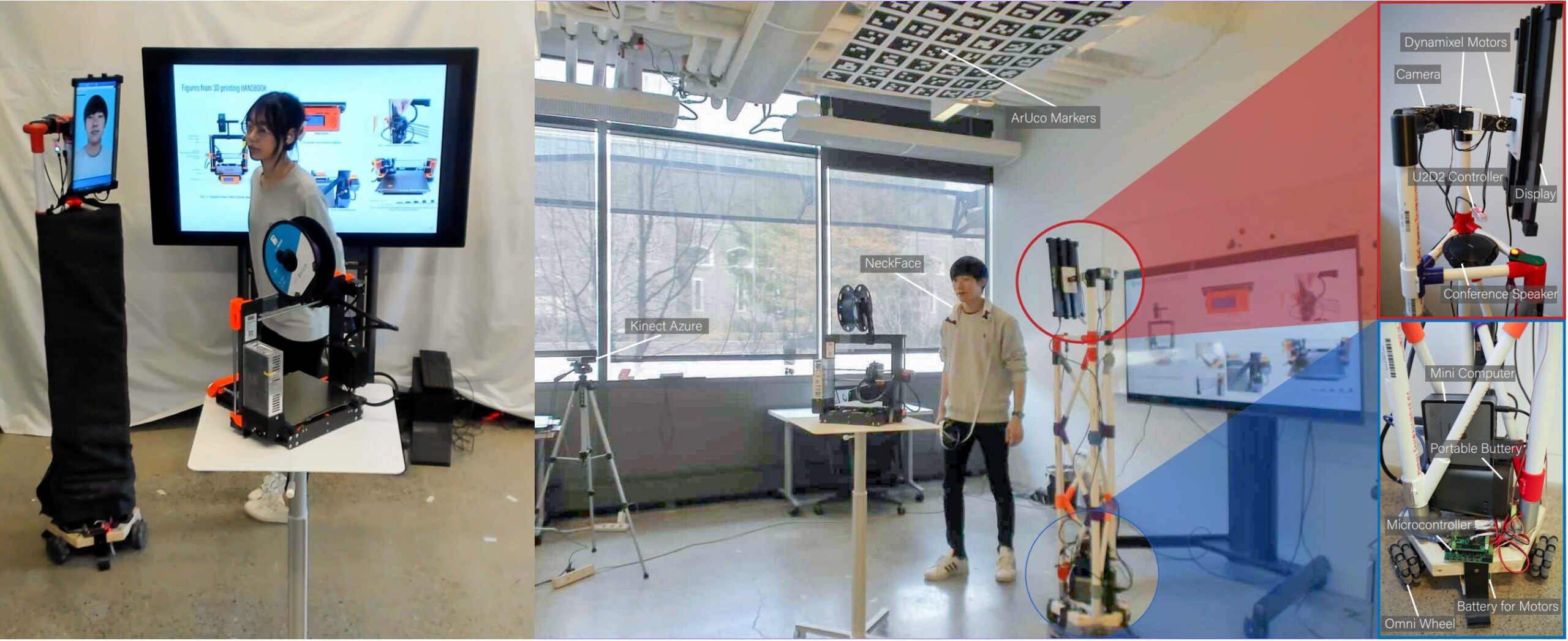

Cornell University researchers have developed a robot, called ReMotion, that occupies physical space on a remote user’s behalf, automatically mirroring the user’s movements in real time and conveying key body language that is lost in standard virtual environments.

“Pointing gestures, the perception of another’s gaze, intuitively knowing where someone’s attention is—in remote settings, we lose these nonverbal, implicit cues that are very important for carrying out design activities,” said Mose Sakashita, a doctoral student of information science.

Sakashita is the lead author of “ReMotion: Supporting Remote Collaboration in Open Space with Automatic Robotic Embodiment,” which he presented at the Association for Computing Machinery CHI Conference on Human Factors in Computing Systems in Hamburg, Germany. “With ReMotion, we show that we can enable rapid, dynamic interactions through the help of a mobile, automated robot.”

The lean, nearly six-foot-tall device is outfitted with a monitor for a head, omnidirectional wheels for feet and game-engine software for brains. It automatically mirrors the remote user’s movements thanks to another Cornell-made device, NeckFace, which the remote user wears to track head and body movements. The motion data is then sent to the ReMotion robot in real time.
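To make the mirroring idea concrete, here is a minimal sketch of how a tracked pose could be turned into a drive command for an omnidirectional base. It is an illustration only, not the researchers' implementation: the `Pose` type, the `mirror_step` function, the proportional gain and the speed limits are all hypothetical, and the sketch assumes both rooms share one coordinate frame.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose:
    """Hypothetical pose in a room frame shared by both sites."""
    x: float        # metres
    y: float        # metres
    heading: float  # radians, counter-clockwise from the x-axis


def wrap_angle(angle: float) -> float:
    """Wrap an angle to the range [-pi, pi]."""
    return math.atan2(math.sin(angle), math.cos(angle))


def mirror_step(robot: Pose, user: Pose, gain: float = 1.0,
                max_speed: float = 1.0, max_turn: float = 2.0):
    """Return (vx, vy, omega) in the robot's body frame.

    A simple proportional controller (an assumption, not the paper's
    method) that drives the robot toward the remote user's pose.
    """
    # Position error in the shared room frame.
    dx = user.x - robot.x
    dy = user.y - robot.y

    # Rotate the error into the robot's body frame; an omnidirectional
    # base can translate along both body axes at once.
    c, s = math.cos(robot.heading), math.sin(robot.heading)
    vx = gain * (c * dx + s * dy)
    vy = gain * (-s * dx + c * dy)

    # Limit the translational speed while keeping its direction.
    speed = math.hypot(vx, vy)
    if speed > max_speed:
        vx, vy = vx * max_speed / speed, vy * max_speed / speed

    # Turn toward the user's heading along the shortest direction.
    omega = gain * wrap_angle(user.heading - robot.heading)
    omega = max(-max_turn, min(max_turn, omega))
    return vx, vy, omega
```

Calling `mirror_step` once per tracking update, with the latest pose received from the remote site, would keep the robot following the user without any manual steering. A real system would also need networking, filtering of noisy tracking data and obstacle handling, none of which is shown here.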

Telepresence robots are not new, but remote users generally need to steer them manually, which distracts from the task at hand, researchers said. Other options, such as virtual reality and mixed reality collaboration, can also require an active role from the user, and headsets may limit peripheral awareness, researchers added.

In a small study, nearly all participants reported having a better connection with their remote teammates when using ReMotion compared to an existing telerobotic system. Participants also reported significantly higher shared attention among remote collaborators.

In its current form, ReMotion works only with two users in a one-on-one remote environment, and the two users must occupy physical spaces of identical size and layout. In future work, ReMotion developers intend to explore asymmetrical scenarios, like a single remote team member collaborating virtually via ReMotion with multiple teammates in a larger room.

Sakashita says that, with further development, ReMotion could be deployed in virtual collaborative environments as well as in classrooms and other educational settings.

The paper is also published as part of the Proceedings of the 2023 CHI Conference on Human Factors in Computing Systems.

More information:

Mose Sakashita et al., ReMotion: Supporting Remote Collaboration in Open Space with Automatic Robotic Embodiment, Proceedings of the 2023 CHI Conference on Human Factors in Computing Systems (2023). DOI: 10.1145/3544548.3580699

Citation:

Robotic proxy brings remote users to life in real time (2023, May 11), retrieved 15 May 2023 from https://techxplore.com/news/2023-05-robotic-proxy-remote-users-life.html
