Governments increasingly rely on algorithmic systems to support consequential assessments and determinations about people’s lives, from judging eligibility for social assistance to trying to predict crime and criminals. Latin America is no exception. With the use of artificial intelligence (AI) posing human rights challenges in the region, EFF today released the report Inter-American Standards and State Use of AI for Rights-Affecting Determinations in Latin America: Human Rights Implications and Operational Framework.

This report draws on international human rights law, particularly standards from the Inter-American Human Rights System, to provide guidance on what state institutions must look out for when assessing whether and how to adopt artificial intelligence (AI) and automated decision-making (ADM) systems for determinations that can affect people’s rights.

We have organized the report’s content, along with testimonies on current challenges from civil society experts on the ground, on our project landing page.

AI-based Systems Implicate Human Rights

The report comes amid the deployment of AI/ADM-based systems by Latin American state institutions for services and decision-making that affect human rights. Colombians must undergo classification by Sisbén, which measures their degree of poverty and vulnerability, if they want to access social protection programs. News reports in Brazil have once again flagged the problems and perils of Córtex, an algorithm-powered surveillance system that cross-references various state databases with wide reach and poor controls. Risk-assessment systems seeking to predict school dropout, children’s rights violations, or teenage pregnancy have been integrated into government-related programs in countries like Mexico, Chile, and Argentina. Different courts in the region have also implemented AI-based tools for a varied range of tasks.

EFF’s report aims to address two primary concerns: opacity and lack of human rights protections in state AI-based decision-making. Algorithmic systems are often deployed by state bodies in ways that obscure how decisions are made, leaving affected individuals with little understanding or recourse.

Additionally, these systems can exacerbate existing inequalities, disproportionately impacting marginalized communities without providing adequate avenues for redress. The lack of public participation in the development and implementation of these systems further undermines democratic governance, as affected groups are often excluded from meaningful decision-making processes relating to government adoption and use of these technologies.

This is at odds with the human rights protections most Latin American countries are required to uphold. A majority of states have committed to comply with the American Convention on Human Rights and the Protocol of San Salvador. Under these international instruments, they have the duty to respect human rights and prevent violations from occurring. As we underscore in the report, two basic tenets must guide any legitimate use of AI/ADM systems by state institutions for consequential decision-making: states are guarantors of rights under international human rights law, and people and social groups are rights holders, entitled to claim those rights and to participate.

Inter-American Human Rights Framework

Building on extensive research into the Inter-American Commission on Human Rights’ reports and the Inter-American Court of Human Rights’ decisions and advisory opinions, we draw out human rights implications and devise an operational framework for their due consideration in government use of algorithmic systems.

We detail what states’ commitments under the Inter-American System mean when state bodies decide to implement AI/ADM technologies for rights-affecting determinations. We explain why this adoption must satisfy the principles of necessity and proportionality, and what this entails. We underscore what it means to take a human rights approach to state AI-based policies, including crucial red lines that should stop their deployment from moving ahead.

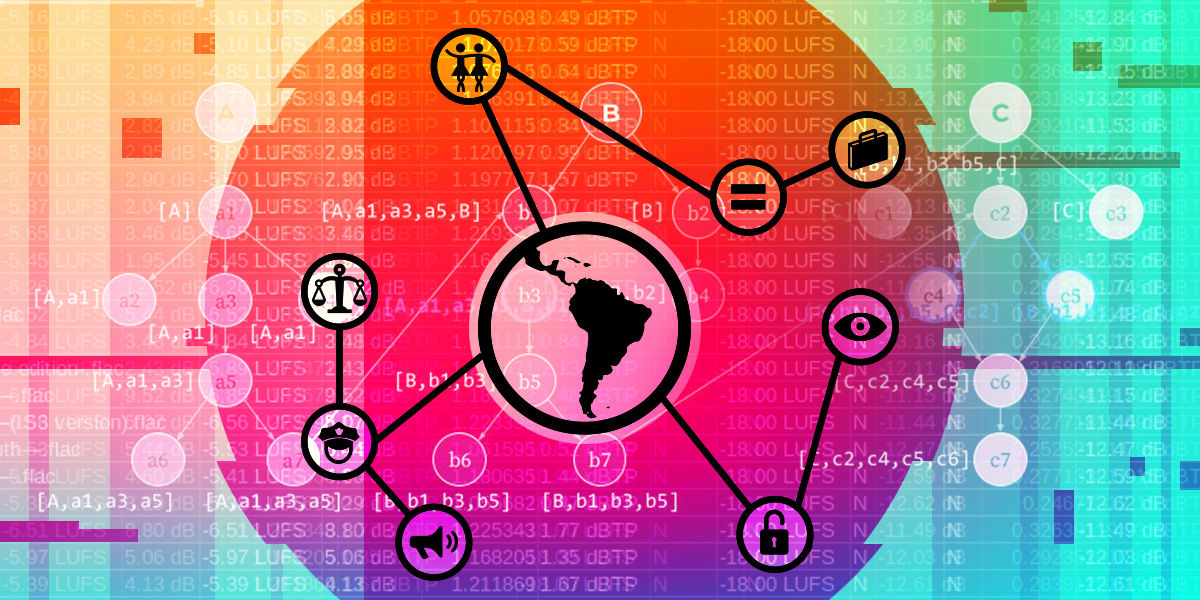

We elaborate on what states must observe to ensure critical rights in line with Inter-American standards. We look particularly at political participation, access to information, equality and non-discrimination, due process, privacy and data protection, freedoms of expression, association and assembly, and the right to a dignified life in connection to social, economic, and cultural rights.

Some of these rights embody principles that must cut across the different stages of AI-based policies or initiatives, from scoping the problem state bodies seek to address and assessing whether algorithmic systems can reliably and effectively contribute to achieving their goals, to continuously monitoring and evaluating their implementation.

These cross-cutting principles are built into the comprehensive operational framework we provide in the report for governments and civil society advocates in the region.

Transparency, Due Process, and Data Privacy Are Vital

Our report’s recommendations reinforce that states must ensure transparency at every stage of AI deployment. Governments must provide clear information about how these systems function, such as the categories of data processed, performance metrics, and details of the decision-making flow, including how humans and machines interact.

It is also essential to disclose important aspects of how they were designed, such as details on the model’s training and testing datasets. Moreover, decisions based on AI/ADM systems must have a clear, reasoned, and coherent justification. Without such transparency, people cannot effectively understand or challenge the decisions being made about them, and the risk of unchecked rights violations increases.

The report also covers due process guarantees. It highlights that decisions made by AI systems often lack the transparency needed for individuals to challenge them. The lack of human oversight in these processes can lead to arbitrary or unjust outcomes. Ensuring that affected individuals have the right to challenge AI-driven decisions through accessible legal mechanisms and meaningful human review is a critical step in aligning AI use with human rights standards.

Transparency and due process relate to ensuring people can fully enjoy the rights that unfold from informational self-determination, including the right to know what data about them are contained in state records, where the data came from, and how they are being processed.

The Inter-American Court recently recognized informational self-determination as an autonomous right protected by the American Convention. It grants individuals the power to decide when and to what extent aspects of their private life can be revealed, including their personal information. It is intrinsically connected to the free development of one’s personality, and any limitations must be legally established, and necessary and proportionate to achieve a legitimate goal.

Ensuring Meaningful Public Participation

Social participation is another cornerstone of the report’s recommendations. We emphasize that marginalized groups, who are most likely to be negatively affected by AI and ADM systems, must have a voice in how these systems are developed and used. Participatory mechanisms must not be mere box-checking exercises; they are vital for ensuring that algorithm-based initiatives do not reinforce discrimination or violate rights. Human Rights Impact Assessments and independent auditing are important vectors for meaningful participation and should be used during all stages of planning and deployment.

Robust legal safeguards, appropriate institutional structures, and effective oversight, often neglected, are underlying conditions for any legitimate government use of AI for rights-affecting determinations. As AI continues to play an increasingly significant role in public life, the findings and recommendations of this report are crucial. Our aim is to make a timely and compelling contribution to a human rights-centric approach to the use of AI/ADM in public decision-making.

We’d like to thank the consultant Rafaela Cavalcanti de Alcântara for her work on this report, and Clarice Tavares, Jamila Venturini, Joan López Solano, Patricia Díaz Charquero, Priscilla Ruiz Guillén, Raquel Rachid, and Tomás Pomar for their insights and feedback on the report.