
When BBC journalist Rory Carson sought online consultations for a potential mental health issue, three private clinics diagnosed him with attention deficit hyperactivity disorder (ADHD). They charged between £685 and £1,095 for consultations lasting between 45 and 100 minutes, and all three prescribed him medication.

ADHD is a highly controversial disorder which emerged in the US in the late 1950s during the cold war, and quickly became associated with stimulant drugs such as Ritalin. Now diagnosed throughout the world, ADHD is central to many debates about neurodiversity.

While Carson’s Panorama investigation into its treatment attracted plenty of criticism, the fact that this disorder could apparently be diagnosed quite casually online is concerning. When he subsequently had a more rigorous (but free) three-hour, in-person consultation with an NHS psychiatrist, he was told that he did not, in fact, have ADHD.

Across the world, we’re seeing unprecedented levels of mental illness at all ages, from children to the very old – with huge costs to families, communities and economies. In this series, we investigate what’s causing this crisis, and report on the latest research to improve people’s mental health at all stages of life.

Society’s increasing awareness of mental health issues and demand for mental health support have been driven, in part, by social media and easier access to information online. While this is no bad thing in many ways, the related increase in self-diagnosis (including among children and adolescents) is clearly open to abuse by some organisations offering costly diagnoses and treatments.

But there is another reason for this rapid growth in private mental healthcare. In England alone, the NHS spends around £2 billion per year on private hospital care for mental health patients – equating to 13.5% of its total mental health spend. Due to the reduction in NHS bed provision, nine out of ten privately run mental health beds are now filled by NHS patients.

While the UK government says it is committed to spending more money on mental health, private investment companies are reportedly queuing up to “seize the opportunities offered up to them by the NHS crisis”. Private providers say they can do more to help avert a mental health emergency exacerbated by the COVID pandemic, yet a dozen of the 80-odd privately run mental health hospitals in England were rated as “inadequate” in the Care Quality Commission’s latest report, which has warned of possible closures.

As a health historian, I find our worsening mental health crisis sadly predictable. Governments around the world have been involved in tackling mental illness since at least the early 19th century. While not all of their attempts were successful, many important lessons remain unlearned.

At the heart of them is this: amid ageing populations and the spiralling costs of mental illness to national economies, investing in people’s future mental health, based on the key socioeconomic factors we know underlie it, is the only effective, long-term way to reduce this burden. As a major coalition of UK mental health organisations recently reported:

The risks to mental health, and the poor outcomes that follow, do not fall evenly across the population. People living in poverty, those with physical disabilities and illnesses, people with neurodevelopmental conditions, children in care, people from racialised communities, and LGBTQ+ people all experience much poorer mental health outcomes because of intersecting disadvantage and discrimination.

All of this means that a person with a severe mental illness has a life expectancy about 20 years shorter than someone without a diagnosis – and that gap is getting bigger. We understand the reasons why – so why do we seem unable to do anything about it?

Learning from history: the emergence of asylums

The first asylum in Britain was Bethlehem Hospital near London’s Bishopsgate, which had begun to specialise in insanity by the 15th century. Commonly referred to as “Bedlam”, what is now Bethlem Royal Hospital was often depicted negatively – including in A Rake’s Progress, a series of eight paintings by the 18th-century English artist William Hogarth.


Across the Atlantic, the treatment of patients in American asylums also proved very controversial. When Ebenezer Haskell escaped the Pennsylvania Hospital for the Insane in 1868, he immediately sued the hospital for unjust confinement and published an account of his ordeal, writing in the foreword:

The object of these pages is … simply to speak a few plain unvarnished truths [on] behalf of the poor, helpless and suffering patients put in these [institutions], and to show why a strong and positive legislative action should be taken for their protection.

The pamphlet included depictions of Haskell being punished and tortured, sometimes in the guise of treatment. In one, he is shown naked and lying on his back on the floor, restrained by four men while another performs “hydrotherapy” – dumping a bucket of water on Haskell’s face as a second man stands ready with another bucket.

Public perceptions of the brutal forms of care provided in mental asylums – and private “madhouses” – continue to be heavily influenced by films such as Shutter Island (2010), Girl, Interrupted (1999) and, perhaps most notably, One Flew Over the Cuckoo’s Nest (1975). Such films, and the novels that inspired them, portray asylums as harsh, unforgiving places run by mostly callous or sadistic staff. While this is justified in some cases, such portrayals mask the impressive ambition, care and expense that went into the building of many asylums by governments around the world during the 19th century.

The provision of care for the mentally ill has long been considered a public responsibility. In Britain, the 1774 Madhouses Act was a response to concerns about abuse in private madhouses. In the decades that followed, the County Asylums Act of 1808 and the Lunacy Act of 1845 were passed in England and Wales to create dedicated public facilities for the mentally ill, so they wouldn’t languish in workhouses. Dozens of asylums began popping up all over Britain, regulated by the newly established Lunacy Commission.

Encouraged by the Age of Enlightenment, which spurred the idea that science could solve most of the world’s problems, Britain was among the pioneers embracing the concept of public health, with governments investing in public infrastructure to prevent infectious disease. In the case of asylums, little expense was spared even for so-called “pauper lunatics”.

At this time, asylums were among the most impressive buildings most people would ever have seen – overshadowed only by cathedrals. However controversial, they were the first concerted, state-led effort to deal with mental illness. And while few mental health experts would recommend a return to the asylum era today, they might well envy the commitment that governments in Britain and elsewhere demonstrated in the facilities they provided for their mentally ill.


The disease that linked mental illness to poverty

Nineteenth-century experts provided numerous explanations for insanity. Some, such as masturbation, we would laugh at today. But financial insecurity, overstudy and overwork, or problems related to giving birth seem much more reasonable and still relevant. Just as heredity was cited as a cause in the past, today we cite genetic predisposition.

As governments began to invest more in hospital infrastructure to treat physical ills, due in particular to advancements in germ theory and surgery, asylum buildings and care standards were often left to deteriorate. In Alabama’s Mount Vernon Insane Hospital, for example, scandal surrounded the deaths of 57 African-American patients in 1906. But the cause of these deaths, pellagra – a disease that can affect the brain and cause severe psychiatric symptoms – has an important place in the history of public mental health treatment.


In northern Italy from the 1850s and the American South from the 1900s, asylums were suddenly filling up with pellagra sufferers. At this time, the disease was thought to be hereditary or contagious, and those afflicted, known as pellagrins, were shunned.

In fact, the real reason they were succumbing to pellagra was poverty. In both regions, landowners had introduced corn due to its high yields and attractiveness as a cash crop. At the same time, in Italy, a deterioration in agricultural working conditions meant that, by the 1870s, many workers relied on cheap corn for food in the form of polenta.

Similarly, in the post-Civil War American South, landowners devoted most of their property to growing cotton, leaving little room for other crops or livestock. So, tenant farmers relied on corn for food in the form of grits or corn pone, which left many suffering from malnutrition and, in particular, a severe deficiency of vitamin B3 (niacin).

This was the real cause of pellagra – but at this time, the role of vitamins in health was little understood. And even when the link between people’s over-reliance on corn in their diets, lack of niacin and mental illness was established by scientists, policymakers were hesitant to acknowledge the role of poverty and malnutrition in this explosion of mental illness.

In the US, New York physician Joseph Goldberger discovered the link between pellagra and poor diet in the mid-1910s – yet the overwhelming evidence he provided was rejected in the American South. For nearly 20 years, southerners were too proud to accept the disease was rooted in poverty, and continued to conduct fruitless research on other causes.

Even today, knowing that a poor diet contributes to poor mental health is one thing; tackling the poverty that leads to a bad diet is quite another. As researchers crystallise the link between diet and mental health – now widely framed in terms of the gut-brain axis – the need for governments to tackle the social determinants of a poor diet, namely poverty and the food insecurity that goes with it, is clear and urgent.

When governments got serious about prevention

In 1929, a 13-year-old girl turned up to a Chicago social service agency, reporting that she had been raped by her brother-in-law. After a medical examination, her case was taken on by a team of social workers who visited her and her family, all Polish immigrants. The social workers took note of the family’s financial circumstances and helped the family press charges against the rapist, who was given a prison sentence. The girl attended counselling sessions for many months.

The agency overseeing this case was one of hundreds of mental hygiene and child guidance clinics founded in the US during its “Progressive Era” in the early 20th century. This was a period of political reform and social activism dedicated to countering the problems associated with industrialisation, urbanisation and immigration, and these child guidance and mental hygiene movements soon spread to Britain and elsewhere.

This article is part of Conversation Insights

The Insights team generates long-form journalism derived from interdisciplinary research. The team is working with academics from different backgrounds who have been engaged in projects aimed at tackling societal and scientific challenges.

Prevention was the cornerstone of these movements, which held that it was much more efficient to prevent mental illness than to treat it. In the US, the clinics were often funded by charities such as the Commonwealth Fund and the Laura Spelman Rockefeller Fund. But the state played an important role too – more so in other parts of the world.

In Britain, social welfare departments established to run similar clinics began to hire new types of mental health worker, such as psychiatric social workers and psychiatric nurses. From the 1930s, education authorities became more involved in child guidance activities, which were included in the 1944 Education Act.

While some conclusions drawn at this time appear shocking today – some mental hygienists and child guiders, for example, were sympathetic to eugenic explanations for mental illness, even if they also acknowledged the role of environmental causes – overall, the existence of child guidance and mental hygiene during the first half of the 20th century demonstrates how seriously preventive mental health was taken.

Today, this is not the case. As in most areas of healthcare, the majority of public and private funding for mental health is funnelled towards researching and prescribing pharmaceutical treatments, rather than prevention.

Such investment has resulted in some effective medications, such as drugs to reduce the symptoms of schizophrenia or bipolar disorder – although there are heated debates about this. But it has also distracted from the need to address the upstream causes of mental illness, while pharmaceutical companies continue to aggressively lobby governments and politicians in the UK, US and elsewhere for more funding.

The peak of care in the community

In 1948, American journalist Albert Deutsch’s landmark book The Shame of the States exposed the parlous position of state-run mental hospitals throughout the US. In contrast to the good intentions that had led to the asylum era, Deutsch showed that many of these hospitals were now under-resourced, overcrowded and poorly staffed institutions characterised by deprivation, violence and abuse.

Around the same time, social psychiatry research was confirming what reformers had long believed: that poor socioeconomic conditions were a significant factor in the mental illness suffered by millions of people.

Dissatisfaction with mental hospitals and faith in psychiatry’s ability to prevent mental illness led to the community mental health movement. Proponents had two main arguments: that the mentally ill were best treated in their home communities, and that such illness could largely be prevented through community intervention.

In the US and elsewhere, political will for radical change was strong. In February 1963, President John F. Kennedy argued that prevention should be central to the US’s approach to mental illness, highlighting the “harsh environmental conditions” in which it flourished. This momentum culminated in the 1963 Community Mental Health Act – the first time the US federal government had invested significantly in mental healthcare. Its ambition was to replace the traditional asylum system with some 2,000 community mental health centres, designed to both provide treatment and engage in preventive work. Fewer than 800 were ultimately built.

Not every psychiatrist wanted to work in community mental health, so other mental health workers were recruited, including social workers, psychologists, nurses and “indigenous paraprofessionals” – people from the local community who lacked formal mental health qualifications. They worked closely with members of the public to help resolve the socioeconomic problems that were fuelling their poor mental health, and also liaised with schools, landlords, welfare officers, the justice system and medical professionals on behalf of their patients.

Yet despite their effectiveness, indigenous paraprofessionals were often an awkward fit within community mental health centres. In New York’s South Bronx neighbourhood, for example, their attempts to unionise, receive training and be respected resulted in rising tensions with the professional healthcare staff. Racism was one of the contributing factors, as most of these paraprofessionals were black or Latinx, while most of the professional staff were white.

In 1969, the South Bronx paraprofessionals went so far as to lock out their centre’s managers and run it themselves for more than two weeks, supported by the Black Panther Party – which further irked the management. While the managers eventually agreed to some of the paraprofessionals’ demands, the underlying tensions were not resolved and, when funding for community mental health decreased, the budgets for paraprofessionals were the first to be cut.

By 1970, little preventive activity was occurring in community mental health centres. It turned out that President Lyndon B. Johnson’s “war on poverty” was more focused on “improving” the poor than on progressive structural reform. Many social psychiatrists agreed that disadvantaged people needed to be “transformed” into upstanding citizens, rather than given material resources. This centuries-old idea of deserving and undeserving poor persists today throughout most of the world.

In the US, an increasing number of mentally ill people became homeless. Others ended up in prison or in nursing homes, while a growing number were cared for by family members. In short, this marked a gradual return to the situation prior to the asylum era, when there was little public support for the mentally ill.

A shift towards treating the individual

The rise and fall of community mental health in the US is a cautionary tale. In the UK too, history shows that preventive approaches to mental health are soon weakened if not accompanied by genuinely progressive social policies that reduce poverty, inequality, racism, social isolation and community disintegration.

Following the election of US president Ronald Reagan in 1980 with a promise to reduce the role of government in most areas, including healthcare and social support, and not long after his political soulmate Margaret Thatcher had come to power in the UK, the community mental health movement lost all momentum on both sides of the Atlantic.

But there was another reason for this: the publication, in 1980, of the third edition of the Diagnostic and Statistical Manual of Mental Disorders (DSM-III). This “bible of psychiatry”, published by the American Psychiatric Association (a new edition emerges roughly every couple of decades), determines what constitutes a psychiatric disorder and how to diagnose it. In the US, if you want your psychiatric treatment covered by health insurance, you must be diagnosed with a disorder listed in the DSM.

Its third edition marked a major shift away from addressing mental health at a population-wide level, in favour of a focus on individual mental disorders. This led psychiatrists and patients away from environmental explanations for mental illness towards genetic and neurological explanations, or biological psychiatry.

This shift was mirrored by the rise of psychopharmacology – the ever-growing use of drug therapies to treat psychiatric patients. Faith in these medications – in particular, antidepressants such as Prozac – further reduced demand for preventive psychiatry. A 2011 study found that antidepressant use in the US roughly quadrupled over two decades from 1988. More recently, antidepressant use in England rose by 35% between 2015 and 2021.

But psychopharmacology has not proved the panacea the pharmaceutical companies promised. One major study in 2022 found that only around 15% of participants in randomised, placebo-controlled trials experienced a substantial antidepressant effect. The fact that long-term use of antidepressants is likely to cause side effects, such as weight gain and sexual dysfunction, also raises questions about their widespread use.

The opioid crisis in the US indicates that we cannot rely on pharmaceutical companies to always do what is in our best interest. Sadly, it has also shown us that millions of Americans, and countless millions more around the world, are struggling to cope with mental as well as physical pain, and are desperate for solutions.

The dangers of privatised mental healthcare

In the UK, US and most other countries, there has probably never been more awareness of mental health issues among the general public – particularly in the wake of the COVID pandemic, whose impact on mental health has often led media headlines and dominated scientific discussions.

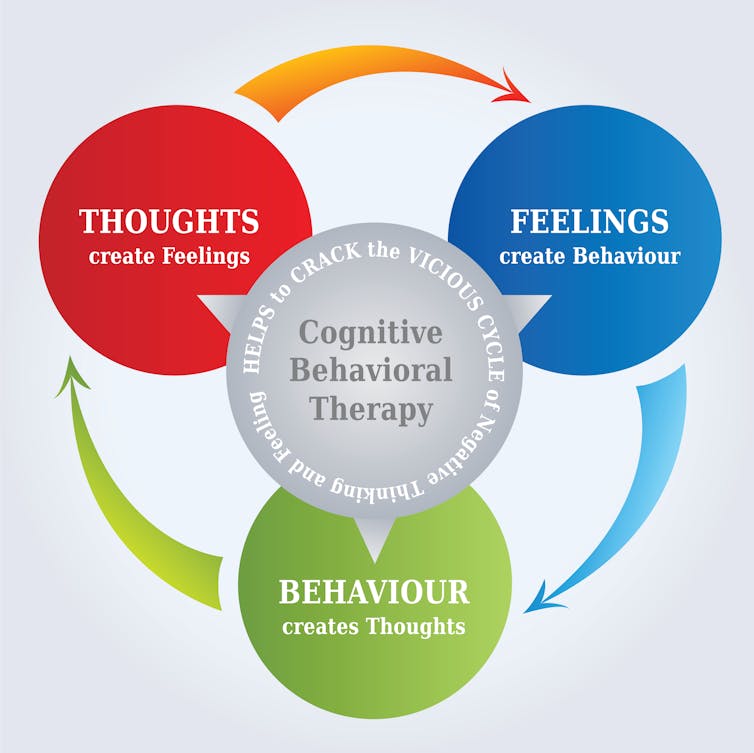

There is also much better evidence for what works – including, for example, the efficacy of talking therapies. In part because of concerns about the overprescription and ineffectiveness of drugs used to treat mental illness, the 21st century has seen a growth in popularity of talking therapies such as cognitive behavioural therapy (CBT) in the west.


However, accessing state-provided talking therapies is very difficult, particularly in less well-off regions. In the UK, average waiting times for the NHS’s Talking Therapies programme vary enormously depending on where you live. There are nearly three times as many NHS consultant psychiatrists per 100,000 people in parts of London as there are in Yorkshire. Vulnerable children in some parts of the UK can wait two years for a first appointment, while those elsewhere are seen within a week.

Overall, while the number of successful referrals for talking therapies such as CBT has increased since the NHS programme’s inception in 2008, so has demand, and the programme has recently been missing its targets by about a third. As a result, an increasing number of people are reported to be seeking private treatment – despite the expense, and amid concerns about the reliability of some services offering these treatments.

As with physical health services, people also opt out of the NHS by purchasing private health insurance or waiting list “fast passes”. All of this creates a two-tier system that undermines the principles of universality and accessibility that are meant to underpin the NHS.

Most private mental health providers, like drug companies, are also motivated by profit and the demands of their shareholders. It is not in their interest to invest in preventive strategies with long-term, non-commoditisable outcomes. Equally, with people living longer and populations ageing rapidly, the future cost of not investing in preventive mental healthcare that makes a difference across whole populations will only grow with every year that passes. The onus is on governments to act now.

Three preventive strategies

The annual cost of mental illness to the UK economy is estimated to be at least £117.9 billion, or 5% of the UK’s annual GDP. Almost three-quarters of this cost is explained by the lost productivity of people living with mental health conditions and the unpaid, informal carers who look after them.

A new report by the children’s charity Barnardo’s has called for a national strategy for social prescribing, suggesting that “every pound spent on helping young people access activities and support in the community could save nearly twice as much in dealing with longer-term mental health problems”.

There are many different potential strategies that could be introduced. Here are three of my favoured options – based not only on new research into the social determinants of health, but also on historical approaches to preventive mental healthcare.

1. To address malnutrition, eradicate food inequality

Today, we are returning to an idea that physicians of the past would have taken for granted: that food is a major contributor to our brain’s health, as well as our body’s. New research on diet and mental health often centres on the “gut-brain axis”: a varied diet consisting of whole grains, legumes, nuts, seeds, fruits and vegetables is thought to support the types of gut bacteria needed to maintain good gut-brain health.

But people in deprived communities often live in so-called “food deserts”, where most of the food available is highly processed, laden with chemicals and high in sugar, salt and fat. A bolder approach to food policy is needed that ensures everyone has access to healthy food – and the skills and means to prepare it.

During the first world war, national kitchens were established to provide people with inexpensive, healthy food in attractive communal settings. The return of such facilities would be welcome today amid the cost of living crisis – and they could also play a role in preventing mental illness.


2. To address poverty, introduce universal basic income

Interest in universal basic income (UBI) schemes – which provide everyone with a guaranteed income with no conditions attached – surged during the pandemic, when many countries introduced furlough or other income replacement schemes. Although UBI pilots have rarely studied mental health specifically, there is still evidence that a secure and sufficient income improves the mental health of participants.

UBI could prevent mental illness in numerous ways – from alleviating the stress of financial insecurity, which can cause inflammation in the brain, to reducing so-called diseases of despair associated with rising inequality, and removing the damaging stigma attached to welfare benefits (as well as the stress on people who work as gatekeepers in the welfare system).

It would also show people currently working as unpaid carers that their labour is valued. Many people find that volunteering benefits their mental health, and the efforts of volunteers contribute significantly to our communities. But it is often a privilege reserved for those with time and money. A UBI would empower everyone to contribute to rebuilding their communities.

3. To tackle depression and isolation, get in touch with nature

During the COVID lockdowns, many people remarked how spending time in nature was their salvation. This built on existing evidence about the positive impact nature can have on our mental health.

However, much like access to healthy food, not everyone has access to natural beauty. Governments could do a great deal to reduce this inequality – for example, by providing inexpensive or free public transportation to national parks and other places of natural beauty. A priority should be ensuring that children from deprived urban backgrounds have regular access to nature.

In addition, more can be done to create new areas of natural beauty while protecting existing areas. Schemes that tackle biodiversity loss and climate change would reduce the clear impact these issues have on some people’s mental health – in part because worries about the climate are also known to trigger anxiety and depression.

Governments have a critical role to play

Responsibility for mental health should not lie solely with the individual. Sure, most of us can do something to improve our own mental wellbeing. But the course of our mental health over a lifetime is largely determined by socioeconomic, genetic and other factors, such as exposure to traumatic events, that may be mostly out of our control.

As centuries of evidence have shown us, governments play a critical role in creating the socioeconomic conditions that determine the mental health of their citizens. Yet, relatively speaking, many are doing less to address this today than they were decades ago. Until and unless this changes, state health providers such as the NHS will never be able to cope with the resulting demand for individual treatments. Those fortunate enough to do so will turn to the private sector. But what about everyone else?


To hear about new Insights articles, join the hundreds of thousands of people who value The Conversation’s evidence-based news. Subscribe to our newsletter.