For any large online business, the platform is a foundational piece. eBay’s platform contains software frameworks and infrastructure in its backend. Because the platform is so important, updates are essential to keeping the applications — including fundamental operations like search and checkout — stable and reliable. At eBay, there are more than 3,000 site applications which serve the online business. These belong to different domains, including payment, selling, marketing tech and more. To keep those applications running efficiently, the platform requires continual, consistent updates, including framework and infrastructure upgrades.

It’s a big challenge, and one that becomes even more fraught because every application platform evolution has to be completed in a short period. On top of that, all dependent systems must keep working normally after platform changes are implemented. A handful of separate scripts or tools is not enough to achieve this. Supporting all of those vital applications requires a common automation solution that covers the different scenarios efficiently.

The Platform

In general, the platform is composed of two parts. The first is the framework that the application code is based on; this framework provides fundamental functionality such as building the request context, exposing metrics and providing common libraries. The second is the infrastructure that hosts the application. At eBay, this is the cloud system.

Platform evolution requires updating both parts; we need to upgrade the framework and adopt new infrastructure. These tasks are error-prone and take considerable manual effort to carry out with high stability and availability.

A common automated solution is designed to handle the entire platform evolution process. It can do all of the preparation, execution and validation jobs automatically, which increases productivity significantly.

This solution is easy to extend; the architecture is designed as a pluggable, loosely coupled pipeline in which each step is a standalone task that can be reused or cherry-picked. The architecture makes it possible to build a customized pipeline for different scenarios, such as a framework upgrade or a migration from an old infrastructure to a newer one.
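As a rough illustration of that idea, the sketch below composes pipelines from a registry of standalone tasks. It is not eBay's implementation: the registry, the `task` decorator, `build_pipeline` and the task names are all hypothetical, chosen only to show how the same tasks could be cherry-picked into different pipelines.

```python
from typing import Callable, Dict, List

# A task is a standalone step: it takes a context dict and returns an updated one.
Task = Callable[[Dict[str, object]], Dict[str, object]]

# Hypothetical task registry; the task names below are illustrative only.
TASKS: Dict[str, Task] = {}


def task(name: str) -> Callable[[Task], Task]:
    """Register a function as a reusable pipeline task under `name`."""
    def register(fn: Task) -> Task:
        TASKS[name] = fn
        return fn
    return register


@task("update_dependencies")
def update_dependencies(ctx):
    # Pretend to bump the framework version in the application's build file.
    return {**ctx, "framework_version": ctx["target_version"]}


@task("build")
def build(ctx):
    return {**ctx, "built": True}


@task("validate")
def validate(ctx):
    # Validation only passes if a build happened earlier in the pipeline.
    return {**ctx, "validated": ctx.get("built", False)}


def build_pipeline(task_names: List[str]) -> Task:
    """Compose the named tasks into one pipeline that runs them in order."""
    steps = [TASKS[name] for name in task_names]

    def run(ctx):
        for step in steps:
            ctx = step(ctx)
        return ctx
    return run


# Two scenarios cherry-pick from the same pool of tasks.
framework_upgrade = build_pipeline(["update_dependencies", "build", "validate"])
smoke_check = build_pipeline(["build", "validate"])

result = framework_upgrade({"app": "demo-app", "target_version": "b"})
```

Because each task only reads and writes the context, adding a new scenario in this sketch means listing task names rather than writing new glue code.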

Use Cases

The below diagram demonstrates some of the different applications based on a dedicated platform, all sitting in a cloud environment. There are different types of upgrades that we’d like to apply to all applications; in some cases that might be a framework upgrade, framework migration, cloud migration or something else.

All of these upgrades, each of which runs as its own program, are grouped under the category of platform evolution. At eBay, platform evolution is near-continuous because of the size and complexity of the marketplace. Framework version upgrades, by contrast, follow a monthly schedule. Let’s get into some typical use cases.

Framework Upgrade

This example involves a framework upgrade, for instance from version a to version b. To apply the new version to every application that relies on the framework, each application needs an automation pipeline that updates its code to the latest framework, builds it, tests it and releases it without issues. This kind of pipeline will contain three flows:

Framework Migration

A typical framework migration program moves applications from a legacy platform to a modern one. During the migration, the applications running on the legacy platform need to be moved to the new platform smoothly. The migration process requires the following flows:

- Collect metadata.
- Set up new applications and corresponding pools.
- Migrate traffic.
- Keep monitoring after migration.
- Decommission the pools that host the legacy platform.

Cloud Migration

In this example, eBay is moving its cloud infrastructure from the virtual-machine-based openstratus to container-based Kubernetes. Adopting the new cloud infrastructure also changes the application development lifecycle: we have to replace the library-based manifest with an image-based manifest, the VM-based pool with a pod-based pool, and the hardware load balancer with a new software load balancer. To migrate from the old cloud system to the new one, the following flows are required:

Solution

Automation that depends on separate scripts or tools cannot guarantee the quality of a platform-level change, so platform evolution needs an overall automation solution. As we worked toward a solution that could build automated pipelines flexible enough to support the different platform evolution cases, it became clear that we would need the following features:

- Support for different kinds of workflows, such as metadata collection, workload setup and traffic migration
- Common tasks must be shareable
- Each workflow must be able to contain several tasks
- Each task must interact with the ecosystem to process actions
- Workflows and tasks need to be easy to manage and orchestrate

Architecture

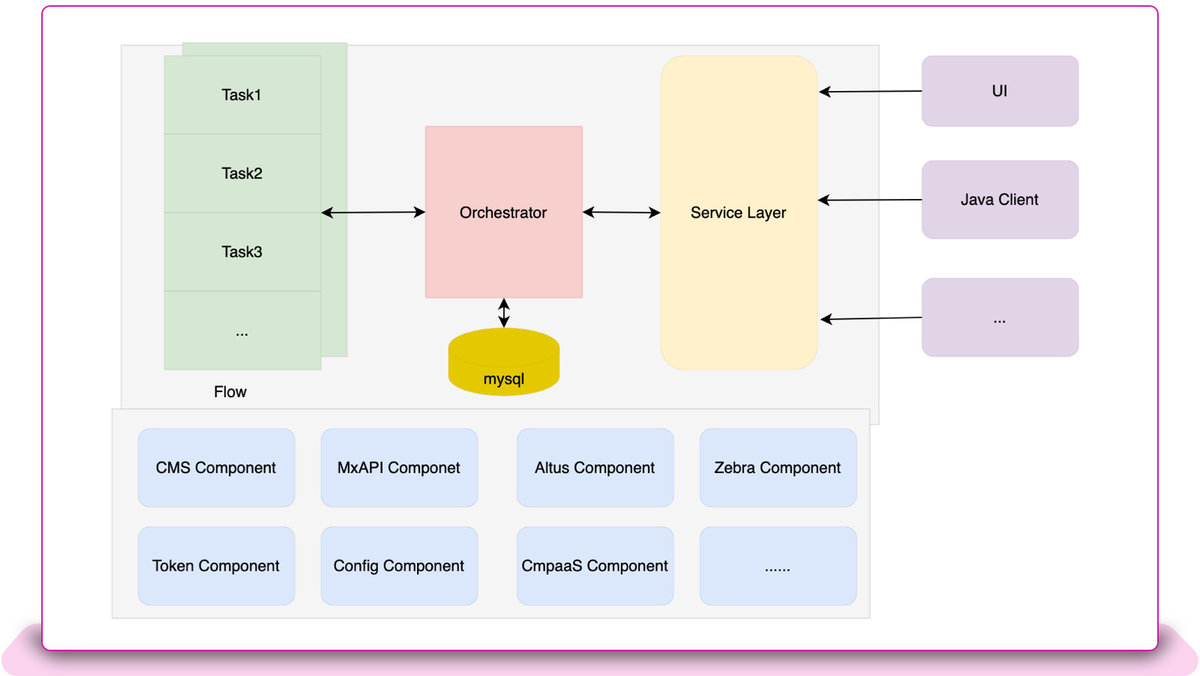

Based on these requirements, we proposed the architecture shown in the diagram.

There are two main parts in this architecture:

- Orchestrator: Based on the input, it resolves the corresponding flow, which is made up of predefined tasks. Each flow is split into smaller tasks that run sequentially, as configured. For example, the orchestrator can resolve one flow for service application migration and another for batch application migration. It then triggers each task in the flow in sequence, logs each task’s run status and handles exceptions until all tasks in the flow are complete. A service layer in front of the orchestrator provides an API for invoking it.
- Pluggable pipeline: It is designed to be flexible so that new customized components can be plugged in. A typical component encapsulates the logic for one job, getting the data it needs from a dependency system. A task, as the runnable unit, can cherry-pick any components it needs to get data from the ecosystem and complete its specific job.
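The relationship between components and tasks can be sketched as follows. This is a minimal illustration under assumptions: the `Component` protocol, the two example components and `CollectMetadataTask` are hypothetical names, and the components return canned data where real ones would call a dependency system.

```python
from dataclasses import dataclass
from typing import Dict, List, Protocol


class Component(Protocol):
    """A component hides how data is fetched from one dependency system."""

    def fetch(self, app_name: str) -> Dict[str, object]: ...


class PoolInventory:
    """Hypothetical component; a real one would query the cloud system."""

    def fetch(self, app_name: str) -> Dict[str, object]:
        return {"pools": [f"{app_name}-pool-1", f"{app_name}-pool-2"]}


class OwnerDirectory:
    """Hypothetical component; a real one would query an ownership service."""

    def fetch(self, app_name: str) -> Dict[str, object]:
        return {"owner": f"{app_name}-team"}


@dataclass
class CollectMetadataTask:
    """A runnable task that cherry-picks the components it needs."""

    components: List[Component]

    def run(self, app_name: str) -> Dict[str, object]:
        metadata: Dict[str, object] = {"app": app_name}
        for component in self.components:
            # Each component contributes the data it knows how to fetch.
            metadata.update(component.fetch(app_name))
        return metadata


collect = CollectMetadataTask(components=[PoolInventory(), OwnerDirectory()])
metadata = collect.run("demo-app")
```

In this sketch a new dependency system is supported by adding one more class with a `fetch` method; existing tasks stay unchanged unless they choose to use it.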

Flow Execution

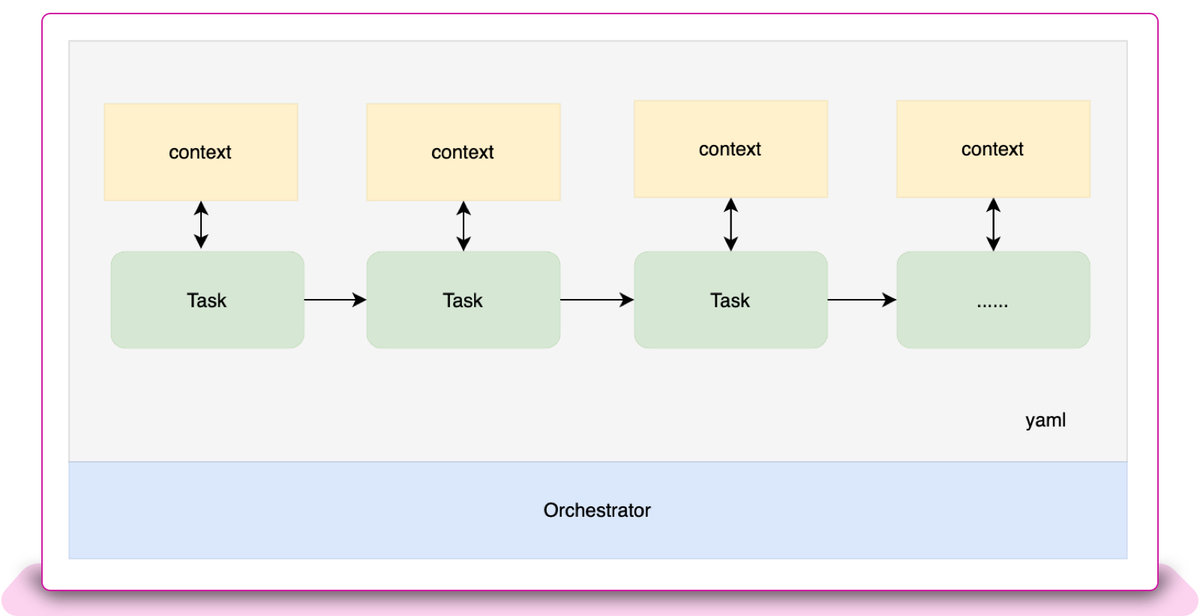

Each application stack (Messaging, Batch, Service, Web) requires its own platform evolution flow with different task definitions. For example, traffic migration for a service application is not the same as for a batch application (HTTP-based request migration versus batch job migration). Each flow is defined in a YAML file that lists the task classes to run.
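The sketch below shows one way stack-specific flows could be resolved to task classes. It is an assumption-laden illustration: the article defines flows in YAML files, whereas here a plain dictionary stands in for those files, and the class names, stack keys and `resolve_flow` helper are hypothetical.

```python
from typing import Dict, List, Type


class Task:
    """Base class for runnable tasks; subclasses mutate the shared context."""

    def run(self, context: Dict[str, object]) -> None:
        raise NotImplementedError


class CollectMetadata(Task):
    def run(self, context):
        context["metadata_collected"] = True


class MigrateHttpTraffic(Task):
    def run(self, context):
        context["migrated"] = "http-traffic"


class MigrateBatchJobs(Task):
    def run(self, context):
        context["migrated"] = "batch-jobs"


# Look up task classes by the name used in the flow definition.
TASK_CLASSES: Dict[str, Type[Task]] = {
    cls.__name__: cls for cls in (CollectMetadata, MigrateHttpTraffic, MigrateBatchJobs)
}

# Stand-in for the YAML flow files: stack name -> ordered task class names.
FLOW_DEFINITIONS: Dict[str, List[str]] = {
    "service": ["CollectMetadata", "MigrateHttpTraffic"],
    "batch": ["CollectMetadata", "MigrateBatchJobs"],
}


def resolve_flow(stack: str) -> List[Task]:
    """Instantiate the tasks defined for the given application stack."""
    if stack not in FLOW_DEFINITIONS:
        raise ValueError(f"no flow defined for stack {stack!r}")
    return [TASK_CLASSES[name]() for name in FLOW_DEFINITIONS[stack]]


def run_flow(stack: str) -> Dict[str, object]:
    context: Dict[str, object] = {"stack": stack}
    for step in resolve_flow(stack):
        step.run(context)
    return context


service_result = run_flow("service")
batch_result = run_flow("batch")
```

Both flows share `CollectMetadata` and differ only in the migration task, which mirrors the service-versus-batch distinction described above.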

Task Context

While a flow runs, the orchestrator persists the task context after each task and restores it before the next task runs. This way, each task is fed the parameters it needs and can run independently. Another benefit: any task can be retried from the context persisted by the previous run.
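A minimal sketch of this persist-and-restore cycle follows. It makes several assumptions: the context is serialized as JSON, an in-memory dictionary stands in for the orchestrator's database, and `save_context`, `load_context`, `run_task` and the flaky task are hypothetical names.

```python
import json
from typing import Callable, Dict

Context = Dict[str, object]

# Stand-in for the orchestrator's database: run id -> serialized context.
_store: Dict[str, str] = {}


def save_context(run_id: str, context: Context) -> None:
    _store[run_id] = json.dumps(context)


def load_context(run_id: str) -> Context:
    return json.loads(_store[run_id])


def run_task(run_id: str, task: Callable[[Context], Context]) -> Context:
    """Restore the context, run one task, and persist the result."""
    context = load_context(run_id)   # what the previous task left behind
    updated = task(context)          # may raise; the stored context is untouched
    save_context(run_id, updated)    # only successful runs advance the context
    return updated


attempts = {"count": 0}


def flaky_set_up_pool(context: Context) -> Context:
    """Hypothetical task that fails on its first attempt."""
    attempts["count"] += 1
    if attempts["count"] == 1:
        raise RuntimeError("transient failure")
    return {**context, "pool": f"{context['app']}-new-pool"}


save_context("run-1", {"app": "demo-app"})
try:
    run_task("run-1", flaky_set_up_pool)
except RuntimeError:
    pass  # the failed attempt leaves the persisted context as it was

after_failure = load_context("run-1")
after_retry = run_task("run-1", flaky_set_up_pool)  # retry from the same context
```

Because the context is written only after a task succeeds, the retry in this sketch starts from exactly the state the failed attempt started from.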

Orchestrator

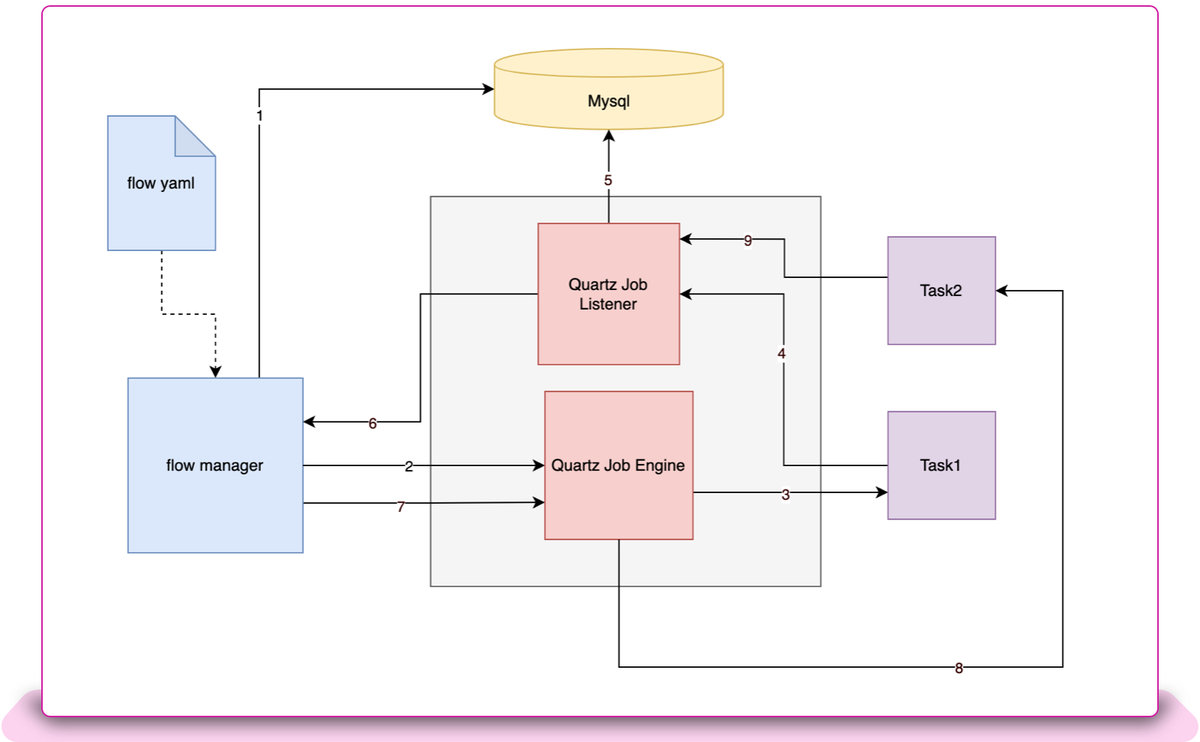

The orchestrator processes the tasks of a flow sequentially. It contains several components:

- Flows and tasks are defined in YAML files.
- The flow manager handles the main logic.
- The open source Quartz framework is responsible for triggering the tasks.
- MySQL stores flows, task status and context.

We selected Quartz as the task engine that executes tasks in the orchestrator. Why did we make this choice? Well:

- Quartz supports distributed execution, so it can make good use of the available compute resources;
- Quartz can persist job status and supports pausing and resuming jobs;
- Quartz has a job listener that decouples Quartz jobs from post-run actions.

Here is the working mechanism inside the orchestrator.

During the orchestrator’s start-up, the flow manager preloads the flow definitions and enables the task listeners that orchestrate task runs.

When the flow manager gets a request to process a flow, it runs the tasks defined in the flow as follows:

- Store the flow for this request in the database.
- Trigger the first task of the flow with the Quartz job engine.
- The Quartz engine finds a box on which to run Task1.
- Once Task1 is done, it triggers the Quartz job listener as a callback.
- The job listener persists the completed task with its context and finds the next task in the stored flow.
- If there is a next task (Task2), the task listener calls the flow manager to submit Task2.
- The flow manager submits Task2 to the Quartz job engine.
- The Quartz job engine triggers Task2 to run.
- The process repeats until the flow ends.

On top of this normal running logic, the flow manager also supports retrying a flow from a given task and skipping specific tasks.
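The steps above, including retry and skip, can be sketched in a few dozen lines. This is a toy model, not the production design: an in-process queue stands in for the Quartz job engine, an in-memory SQLite database stands in for MySQL, and the `FlowManager` API (`start`, `retry`, `history`) and all task names are hypothetical.

```python
import json
import sqlite3
from collections import deque
from typing import Callable, Dict, Iterable, List, Tuple

TaskFn = Callable[[Dict[str, object]], None]


class FlowManager:
    """Toy flow manager: a deque stands in for Quartz, SQLite for MySQL."""

    def __init__(self, flows: Dict[str, List[str]], tasks: Dict[str, TaskFn]):
        self.flows, self.tasks = flows, tasks
        self.queue: deque = deque()        # submitted (run_id, task_name) jobs
        self.runs: Dict[str, dict] = {}    # run_id -> flow name, context, skips
        self.db = sqlite3.connect(":memory:")
        self.db.execute(
            "CREATE TABLE task_run (run_id TEXT, task TEXT, status TEXT, context TEXT)")

    def start(self, run_id: str, flow: str, context: dict, skip: Iterable[str] = ()):
        self.runs[run_id] = {"flow": flow, "context": dict(context), "skip": set(skip)}
        self._submit(run_id, self.flows[flow][0])   # trigger the first task
        self._drain()

    def retry(self, run_id: str, from_task: str):
        self._submit(run_id, from_task)             # resume with the kept context
        self._drain()

    def _submit(self, run_id: str, task_name: str):
        self.queue.append((run_id, task_name))

    def _drain(self):
        """Job engine stand-in: run queued tasks, then notify the listener."""
        while self.queue:
            run_id, task_name = self.queue.popleft()
            run = self.runs[run_id]
            if task_name in run["skip"]:
                status = "SKIPPED"
            else:
                try:
                    self.tasks[task_name](run["context"])
                    status = "DONE"
                except Exception:
                    status = "FAILED"
            self._on_task_finished(run_id, task_name, status)

    def _on_task_finished(self, run_id: str, task_name: str, status: str):
        """Listener callback: persist the result, then submit the next task."""
        run = self.runs[run_id]
        self.db.execute("INSERT INTO task_run VALUES (?, ?, ?, ?)",
                        (run_id, task_name, status, json.dumps(run["context"])))
        if status == "FAILED":
            return                                   # stop; the flow can be retried
        names = self.flows[run["flow"]]
        next_index = names.index(task_name) + 1
        if next_index < len(names):
            self._submit(run_id, names[next_index])

    def history(self, run_id: str) -> List[Tuple[str, str]]:
        rows = self.db.execute(
            "SELECT task, status FROM task_run WHERE run_id = ? ORDER BY rowid", (run_id,))
        return rows.fetchall()


capacity = {"available": False}   # simulated external condition

def collect_metadata(ctx): ctx["metadata"] = "collected"
def migrate_traffic(ctx): ctx["traffic"] = "migrated"
def set_up_pool(ctx):
    if not capacity["available"]:
        raise RuntimeError("no capacity for the new pool")
    ctx["pool"] = "created"

manager = FlowManager(
    flows={"service_migration": ["collect_metadata", "set_up_pool", "migrate_traffic"]},
    tasks={"collect_metadata": collect_metadata, "set_up_pool": set_up_pool,
           "migrate_traffic": migrate_traffic},
)
manager.start("run-1", "service_migration", {"app": "demo-app"})
first_attempt = manager.history("run-1")
capacity["available"] = True
manager.retry("run-1", from_task="set_up_pool")
manager.start("run-2", "service_migration", {"app": "demo-app"}, skip=["set_up_pool"])
```

Keeping the "what comes next" decision in the listener callback, rather than inside each task, is what lets tasks stay unaware of the flow they belong to in this sketch.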

Summary

The common automation solution has made it convenient and effective to set up a specific automation pipeline for each platform evolution program at eBay.

For platform evolution programs such as legacy framework migration, site-wide upgrades and cloud migration, tasks and components are fully reused, and generic tasks can be hardened and shared across flows. As reusable assets, these tasks and components save considerable development effort, and a fully functional automation flow can be defined quickly by combining different tasks.

This pipeline-based common automation solution can be extended to be more generic; it can support any case that needs to be carried out step by step, with verification and monitoring at each step.

It plays an important role in platform evolution, and will become a key capability of our platform for supporting similar scenarios such as infrastructure upgrades and framework upgrades.