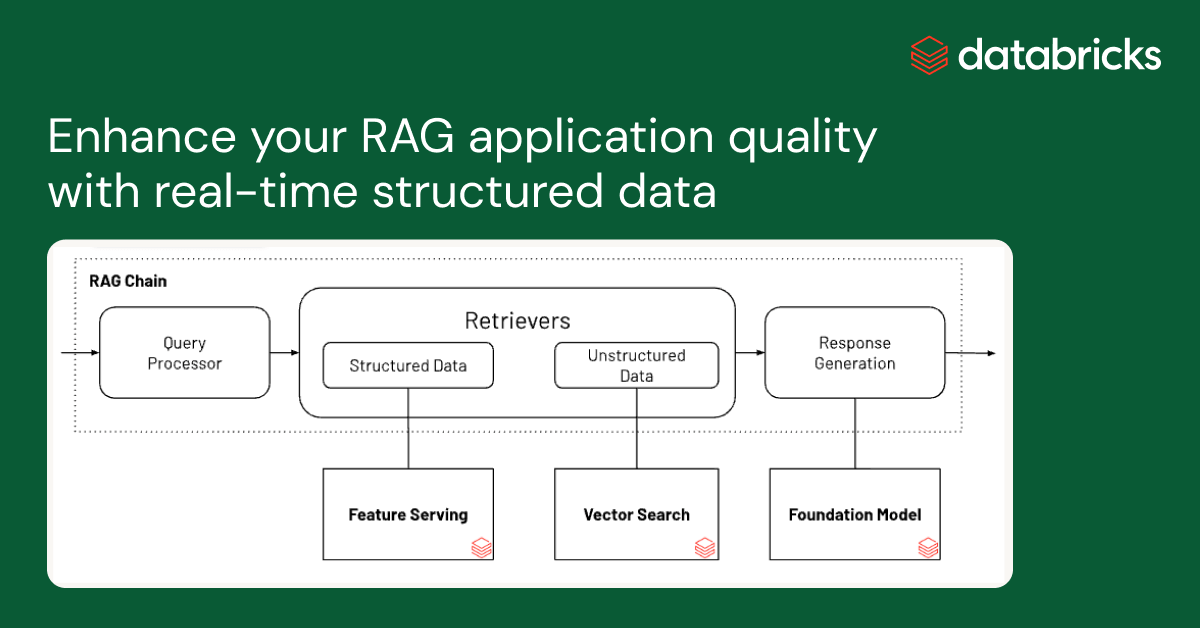

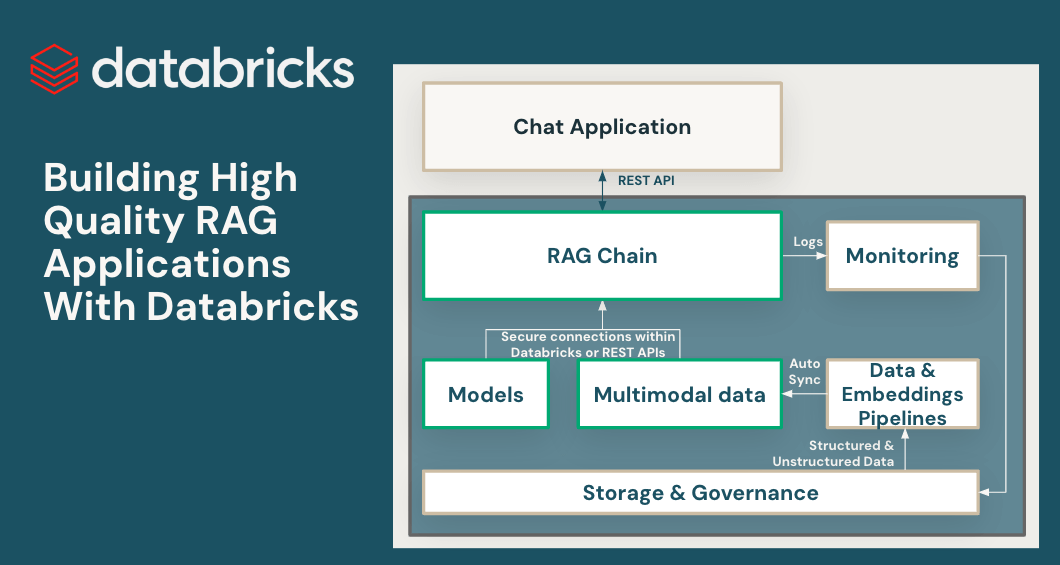

Improve your RAG application response quality with real-time structured data

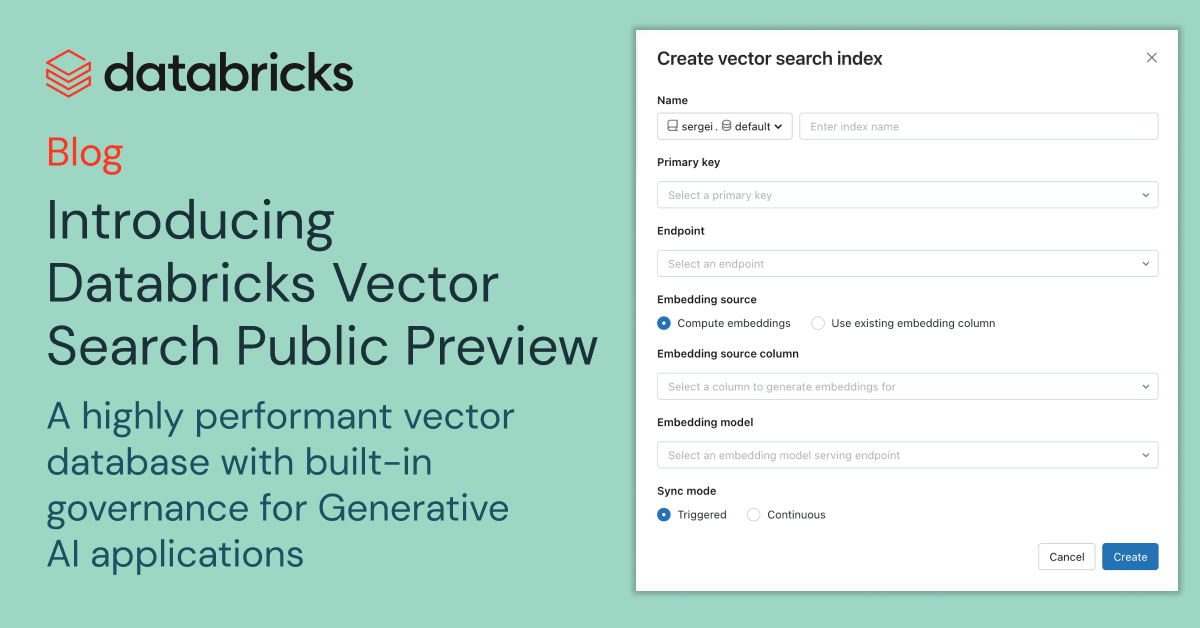

Retrieval Augmented Generation (RAG) is an efficient mechanism for supplying relevant data as context to Gen AI applications. Most RAG applications use vector indexes to search unstructured data such as documentation, wikis, and support tickets for relevant context. Yesterday, we announced the Databricks Vector Search Public Preview, which helps with exactly that. However, Gen […]
