Improving the Delta Live Tables (DLT) development experience is a core focus for us because it directly affects the efficiency and satisfaction of developers building data pipelines with DLT. We are excited to announce several enhancements to the DLT development experience in notebooks. These new features provide a seamless and intuitive DLT development interface and help you build and debug your pipelines quickly and efficiently.

Delta Live Tables (DLT) is a framework that simplifies and accelerates building, testing, and maintaining reliable data pipelines. It offers declarative data engineering and automatic pipeline management, so you can focus on defining business logic while the framework handles dependency tracking, error recovery, and monitoring. For organizations aiming to run their data operations efficiently and precisely, this helps ensure that data scientists and analysts always have access to up-to-date, high-quality data.

With this release, we are bringing new features to the experience of developing DLT pipelines in notebooks:

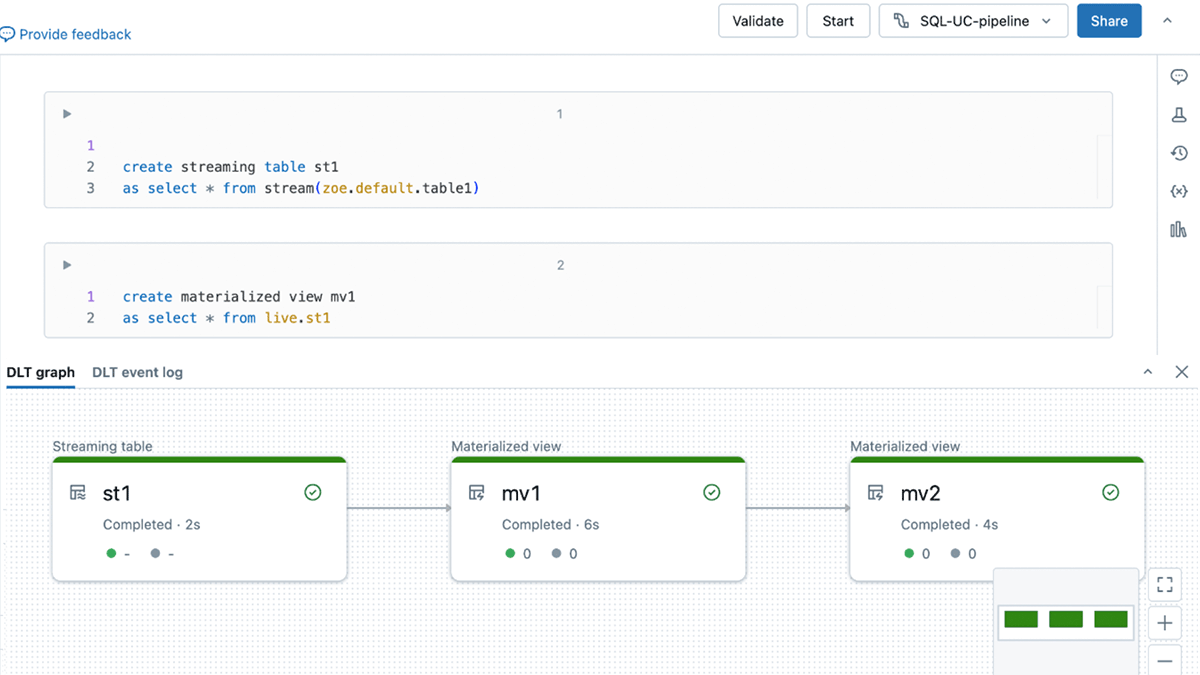

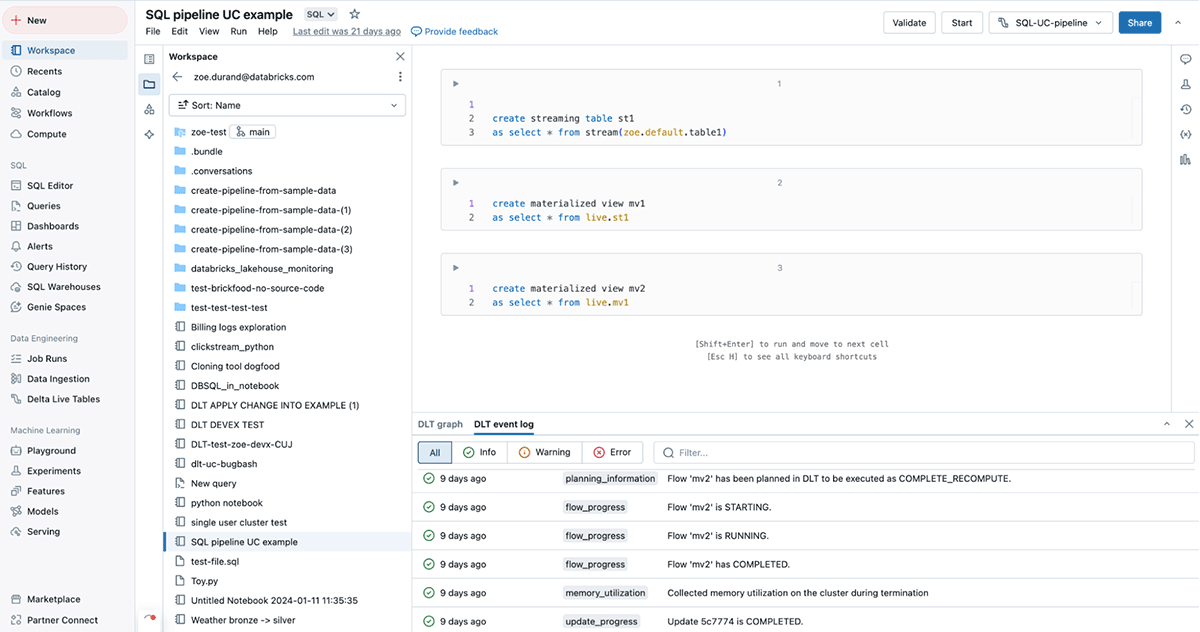

- No more context switching: see the DLT graph, the event log and notebook code in one single contextual UI.

- Quickly find syntax errors with the new “Validate” action.

- Develop code more easily with DLT-specific autocomplete, in-line errors, and diagnostics.

No more context switching: develop your DLT pipelines in one single contextual UI

You can now “connect” to your DLT pipeline directly from the notebook, just as you would connect to a SQL warehouse or an interactive cluster.

Once connected to your DLT pipeline, you have access to a new all-in-one UI. You can see the DLT graph (also referred to as the Directed Acyclic Graph or “DAG”) and the DLT event log in the same UI as the code you are editing.

With this new all-in-one UI, you can do everything you need to do without switching tabs! You can check the shape of the DLT graph and the schema of each table as you are developing, to make sure that you are getting the results that you want. You can also check the event log for any errors that arise during the development process.

Keeping the graph, the event log, and the code side by side shortens the edit-and-check loop and makes developing a DLT pipeline considerably more ergonomic.
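To make the idea of the DLT graph more concrete, here is a minimal, self-contained sketch of how a dependency graph can be derived from the datasets a pipeline declares. It is plain Python and does not use the DLT API; the dataset names and the `reads_from` mapping are hypothetical and exist only for illustration.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+

# Hypothetical pipeline: each dataset maps to the datasets it reads from.
# These names are illustrative and not taken from any real pipeline.
reads_from = {
    "raw_orders": set(),
    "raw_customers": set(),
    "clean_orders": {"raw_orders"},
    "orders_by_customer": {"clean_orders", "raw_customers"},
}


def execution_order(deps):
    """Return dataset names so that every dataset comes after its inputs."""
    return list(TopologicalSorter(deps).static_order())


def downstream_of(deps, name):
    """Return the datasets that read directly from `name`, sorted by name."""
    return sorted(t for t, inputs in deps.items() if name in inputs)


order = execution_order(reads_from)
print(order)
print(downstream_of(reads_from, "clean_orders"))
```

The graph in the notebook UI presents this kind of information visually: which datasets feed which, so you can confirm the shape of the pipeline while you are still editing it.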

Catch errors faster and easily develop DLT code

1. Catch syntax errors quickly with “Validate”

We are introducing a “Validate” action for DLT pipelines, alongside “start” and “full refresh”.

With “Validate”, you can check for problems in a pipeline’s source code without processing any data. This lets you iteratively find and fix errors, such as incorrect table or column names, while you are developing or testing a pipeline.
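The sketch below illustrates the general idea of checking declarations without touching data. It is a toy model in plain Python, not the DLT API and not a description of how “Validate” is implemented; `TableSpec`, `validate`, and all table and column names are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class TableSpec:
    """Declaration of one dataset: its output columns and what it reads."""
    columns: set
    reads: dict = field(default_factory=dict)  # upstream table -> columns used


def validate(pipeline, sources):
    """Check declarations only; no data is read or written.

    `sources` maps external table names to their columns.
    Returns a list of problem descriptions (empty if none are found).
    """
    known = {**sources, **{name: spec.columns for name, spec in pipeline.items()}}
    problems = []
    for name, spec in pipeline.items():
        for upstream, used in spec.reads.items():
            if upstream not in known:
                problems.append(f"{name}: unknown table '{upstream}'")
                continue
            for col in sorted(used - known[upstream]):
                problems.append(f"{name}: unknown column '{col}' in '{upstream}'")
    return problems


sources = {"raw_orders": {"order_id", "amount", "customer_id"}}
pipeline = {
    "clean_orders": TableSpec(
        columns={"order_id", "amount"},
        reads={"raw_orders": {"order_id", "amuont"}},  # typo in a column name
    ),
    "daily_totals": TableSpec(
        columns={"day", "total"},
        reads={"clean_order": {"amount"}},  # typo in a table name
    ),
}

problems = validate(pipeline, sources)
for problem in problems:
    print(problem)
```

Both typos are reported from the declarations alone, which is why a check like this can run in a fast loop while you edit.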

“Validate” is available as a button in the notebook UI, and it also runs when you press the “shift+enter” keyboard shortcut.

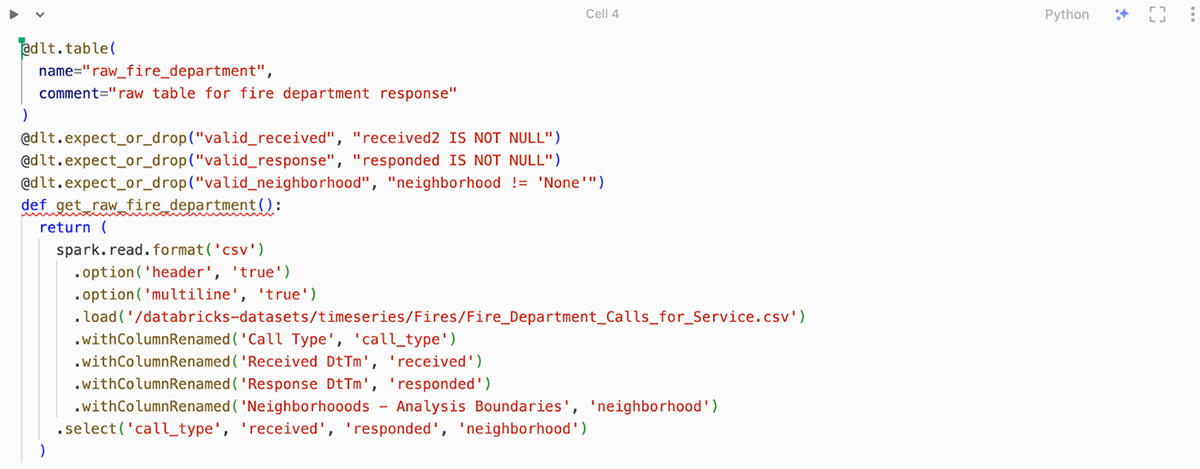

2. Develop your code more easily with DLT-aware autocomplete, in-line errors and diagnostics

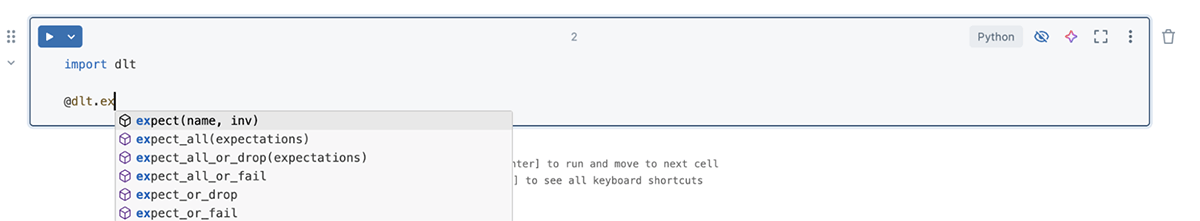

You can now use DLT-specific autocomplete, which makes writing code faster and more accurate.

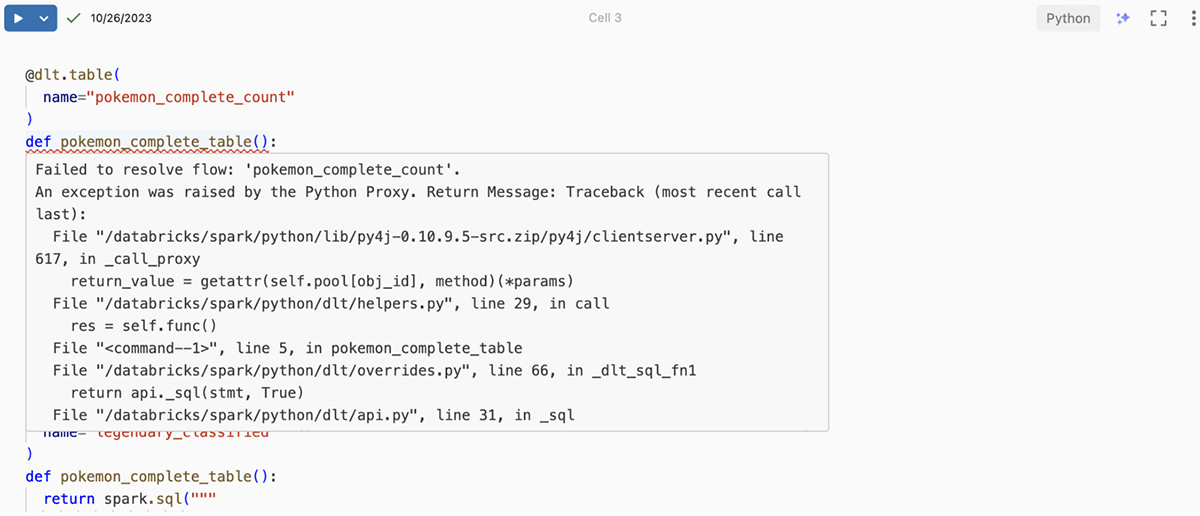

Additionally, you can easily identify syntax errors with red squiggly lines that highlight the exact error location within your code.

Finally, the inline diagnostic box displays relevant error details and suggestions right at the affected line. Hover over an error to see more information.

How to get started?

Just create a DLT pipeline and a notebook, then connect to your pipeline from the compute drop-down. You can try these new notebook capabilities on Azure, AWS, and GCP.