LLM Training and Inference with Intel Gaudi 2 AI Accelerators

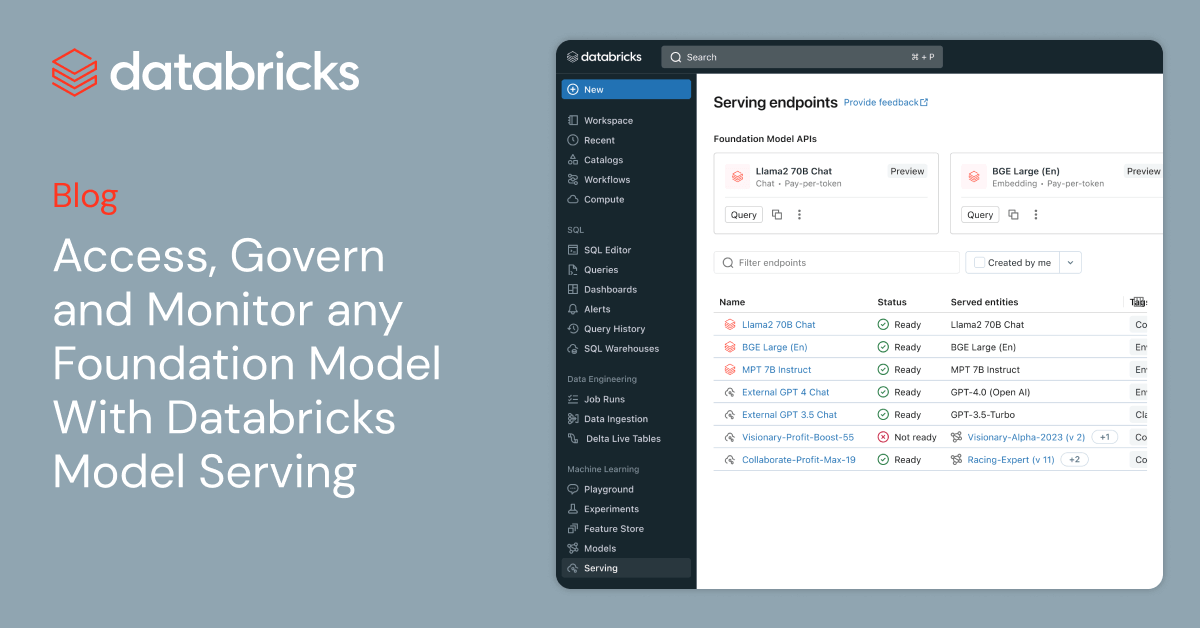

At Databricks, we want to help our customers build and deploy generative AI applications on their own data without sacrificing data privacy or control. We help customers who want to train a custom AI model do so easily, efficiently, and at low cost. One lever we have to address this challenge is […]
