Delta Lake has more than 20M monthly downloads. With new first-party support for Delta Lake, BigQuery builds on Delta's rich connector ecosystem and integrates seamlessly with Databricks. In this blog post, we cover:

- Delta Lake on Google Cloud

- Building an open data lakehouse with Databricks and BigQuery

- How to read Delta Lake in BigQuery

Delta Lake on Google Cloud

Delta Lake is an optimized storage layer that enhances performance and reliability for enterprise data lakes. Delta is used by over 10,000 companies, including more than 60% of the Fortune 500. As a fully open source Linux Foundation project, Delta Lake offers a rich connector ecosystem with support from many popular open source frameworks and commercial engines. BigQuery now offers integrated Delta Lake support, extending the Delta Lake ecosystem to Google Cloud.

With BigQuery support, you can write data in Delta format and continue to use native Google Cloud services downstream, all from a single copy of the data. BigQuery's Delta connector includes support for recent Delta innovations such as deletion vectors, column mapping, and liquid clustering.
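As a rough illustration of those features, the following Databricks SQL sketch creates a Delta table with deletion vectors, column mapping, and liquid clustering enabled. The table name, columns, clustering key, and `gs://` path are hypothetical placeholders, and the sketch assumes a recent Databricks Runtime that supports liquid clustering and upgrades the table protocol automatically when these properties are set.

```sql
-- Databricks SQL (sketch). Table name, columns, and path are hypothetical.
CREATE TABLE main.default.trips_features_demo (
  trip_id BIGINT,
  pickup_time TIMESTAMP,
  pickup_zip INT,
  fare_amount DOUBLE
)
USING DELTA
-- Liquid clustering: organize data files by pickup_zip instead of partitioning.
CLUSTER BY (pickup_zip)
LOCATION 'gs://mybucket/mydata/trips_features_demo/'
TBLPROPERTIES (
  -- Deletion vectors: DELETE/UPDATE/MERGE can mark rows as removed
  -- without rewriting the affected Parquet files.
  'delta.enableDeletionVectors' = 'true',
  -- Column mapping by name: allows renaming and dropping columns
  -- without rewriting data files.
  'delta.columnMapping.mode' = 'name'
);
```

Because these are table-level properties recorded in the Delta transaction log, any reader that supports them, including BigQuery's Delta connector as described above, can read the same files without extra configuration.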

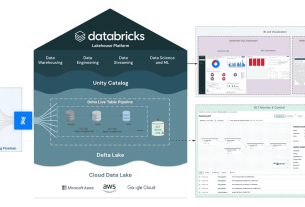

Lakehouse on Databricks and BigQuery

The lakehouse architecture combines the flexibility of data lakes with the reliability of data warehouses. BigQuery support for Delta Lake is enabled through BigLake. BigLake is a storage engine that enables customers to store data in an open table format on cloud object storage, providing the flexibility to use BigQuery with other platforms like Databricks. Customers can converge their data warehouses and data lakes on a unified storage layer, using Delta Lake and BigLake.

By standardizing your data lake in Delta Lake, you can:

- Unify data access: Maintain a single authoritative copy of your data that can be queried by both Databricks and BigQuery without the need to export, copy, or use manifest files

- Efficiently share data: Share data seamlessly across different processing engines like BigQuery, Databricks, Dataproc, and Dataflow, enabling efficient data utilization and collaboration

“Google Cloud is committed to fostering an open and interoperable data ecosystem,” said Ritika Suri, Director, Data and AI Technology Partnerships at Google Cloud. “Adding support for Delta Lake in BigQuery is a testament to our dedication to delivering an open platform with a comprehensive set of cloud solutions for managing their data.”

Reading Delta Lake in BigQuery

You can read Delta Lake in BigQuery with just a few easy steps. To start, let’s create a Delta table in Databricks:

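```sql
-- Databricks SQL: write the NYC taxi sample data as a Delta table in Cloud Storage
CREATE TABLE main.default.DeltaLake_demo
LOCATION 'gs://mybucket/mydata/mytable/'
AS (SELECT * FROM samples.nyctaxi.trips);
```

Before you can access the table in BigQuery, you need a Cloud resource connection to Cloud Storage, read access to the bucket for the connection's service account (for example, the Storage Object Viewer role), and the required permissions in BigQuery. Then create an external Delta Lake table in BigQuery, specifying the Delta table's Cloud Storage prefix as the URI: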

```sql
-- BigQuery SQL: define an external table over the Delta table's storage prefix
CREATE EXTERNAL TABLE myProject.dataset.DeltaLake_demo
WITH CONNECTION `myProject.us.myConnection`
OPTIONS (
  format = "DELTA_LAKE",
  uris = ["gs://mybucket/mydata/mytable/"]
);
```

When you query a Delta table, BigQuery reads the Delta transaction log (`_delta_log`) under the prefix to identify the current version of the table. BigQuery automatically detects data and schema changes, so you can read the latest snapshot without manually refreshing table metadata.

```sql
SELECT * FROM myProject.dataset.DeltaLake_demo;
```

Reading Delta Lake in BigQuery is that simple. With Delta Lake, you can use both Databricks and BigQuery without duplicating data files or manually maintaining table metadata, while also leveraging the latest Delta features.
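To see the automatic snapshot detection in practice, you can change the table in Databricks and then query it again from BigQuery without refreshing any metadata. This is a sketch: the `fare_amount <= 0` filter is an arbitrary, hypothetical change chosen for illustration, and it assumes the `samples.nyctaxi.trips` data copied into the demo table includes a `fare_amount` column.

```sql
-- Step 1 (Databricks SQL): modify the table. The write commits a new version
-- to the transaction log under gs://mybucket/mydata/mytable/_delta_log/.
DELETE FROM main.default.DeltaLake_demo WHERE fare_amount <= 0;

-- Step 2 (Databricks SQL): list the committed versions of the table.
DESCRIBE HISTORY main.default.DeltaLake_demo;

-- Step 3 (BigQuery SQL): query the external table again. No refresh is issued;
-- BigQuery resolves the current table version from the log at query time.
SELECT COUNT(*) AS non_positive_fares
FROM myProject.dataset.DeltaLake_demo
WHERE fare_amount <= 0;
```

If deletion vectors are enabled on the table, the `DELETE` may record removed rows in deletion vector files rather than rewriting data files; since BigQuery's connector supports deletion vectors, step 3 reads those changes from the same single copy of data.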

At Databricks, we are excited to enable open access to enterprise data through Delta Lake. We will continue to invest in our partnership with Google Cloud to help customers integrate Databricks with BigQuery and other Google Cloud services.

You can learn more about Delta Lake and our partnership with Google Cloud in upcoming sessions at the Data and AI Summit, June 10-13, 2024. The summit runs in a hybrid format, with sessions live in San Francisco and online.