Yes to the “ICE Out of Our Faces Act”

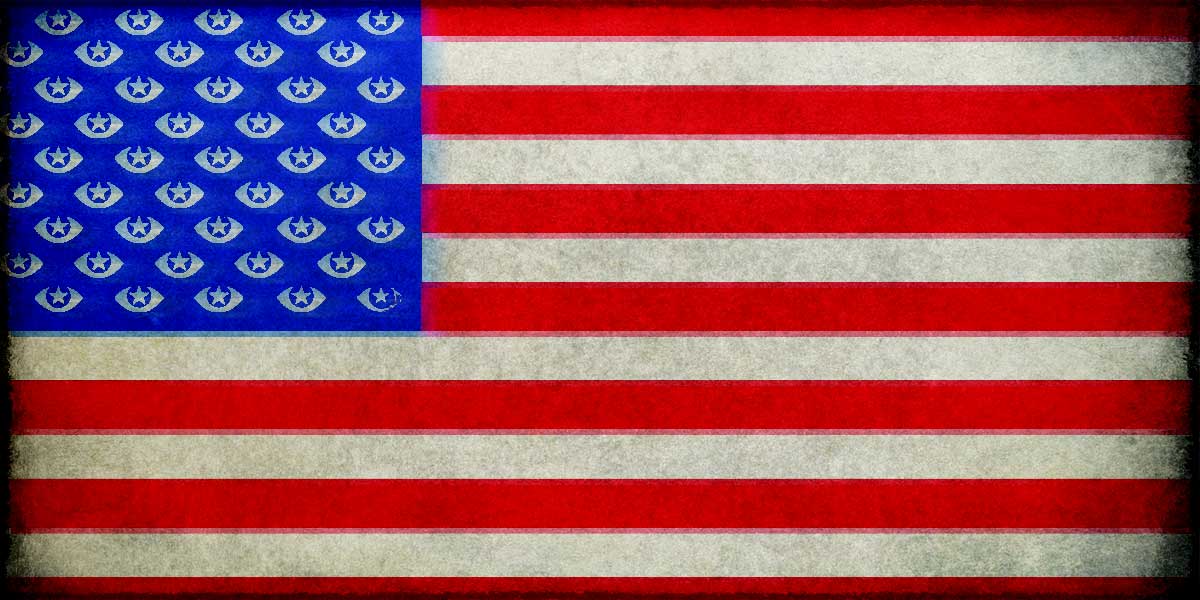

Immigration and Customs Enforcement (ICE) and Customs and Border Protection (CBP) have descended into utter lawlessness, most recently in Minnesota. The violence is shocking. So are the intrusions on digital rights and civil liberties. For example, immigration agents are routinely scanning faces of people they suspect of unlawful presence in the country – 100,000 times, […]
